Iceberg: A loudspeaker-based room

auralization method for auditory

research

Sergio Luiz Aguirre

Submitted in fulfilment of the requirements for the degree of

Doctor of Philosophy

Hearing Sciences – Scottish Section - School of Medicine

University of Nottingham

Supervised by William M. Whitmer, Lars Bramsløw, &

Graham Naylor

2022

There is no “nonspatial hearing” — Jens Blauert

Abstract

Depending on the acoustic scenario, people with hearing loss are challenged

on a different scale than normal hearing people to comprehend sound, espe-

cially speech. That happen especially during social interactions within a group,

which often occurs in environments with low signal-to-noise ratios. This com-

munication disruption can create a barrier for people to acquire and develop

communication skills as a child or to interact with society as an adult. Hear-

ing loss compensation aims to provide an opportunity to restore the auditory

part of socialization. Technology and academic efforts progressed to a bet-

ter understanding of the human hearing system. Through constant efforts

to present new algorithms, miniaturization, and new materials, constantly-

improving hardware with high-end software is being developed with new fea-

tures and solutions to broad and specific auditory challenges. The effort to

deliver innovative solutions to the complex phenomena of hearing loss encom-

passes tests, verifications, and validation in various forms. As the newer de-

vices achieve their purpose, the tests need to increase the sensitivity, requiring

conditions that effectively assess their improvements.

Regarding realism, many levels are required in hearing research, from pure

tone assessment in small soundproof booths to hundreds of loudspeakers com-

bined with visual stimuli through projectors or head-mounted displays, light,

and movement control. Hearing aids research commonly relies on loudspeaker

setups to reproduce sound sources. In addition, auditory research can use

well-known auralization techniques to generate sound signals. These signals

can be encoded to carry more than sound pressure level information, adding

spatial information about the environment where that sound event happened

or was simulated. This work reviews physical acoustics, virtualization, and

auralization concepts and their uses in listening effort research. This knowl-

edge, combined with the experiments executed during the studies, aimed to

provide a hybrid auralization method to be virtualized in four-loudspeaker se-

tups. Auralization methods are techniques used to encode spatial information

into sounds. The main methods were discussed and derived, observing their

spatial sound characteristics and trade-offs to be used in auditory tests with

one or two participants. Two well-known auralization techniques (Ambisonics

and Vector-Based Amplitude Panning) were selected and compared through

a calibrated virtualization setup regarding spatial distortions in the binau-

ral cues. The choice of techniques was based on the need for loudspeakers,

although a small number of them. Furthermore, the spatial cues were exam-

ined by adding a second listener to the virtualized sound field. The outcome

reinforced the literature around spatial localization and these techniques driv-

ing Ambisonics to be less spatially accurate but with greater immersion than

Vector-Based Amplitude Panning.

A combination study to observe changes in listening effort due to different

signal-to-noise ratios and reverberation in a virtualized setup was defined. This

i

experiment aimed to produce the correct sound field via a virtualized setup

and assess listening effort via subjective impression with a questionnaire, an

objective physiological outcome from EEG, and behavioral performance on

word recognition. Nine levels of degradation were imposed on speech signals

over speech maskers separated in the virtualized space through Ambisonics’

first-order technique in a setup with 24 loudspeakers. A high correlation be-

tween participants’ performance and their responses on the questionnaire was

observed. The results showed that the increased virtualized reverberation time

negatively impacts speech intelligibility and listening effort.

A new hybrid auralization method was proposed merging the investigated tech-

niques that presented complementary spatial sound features. The method was

derived through room acoustics concepts and a specific objective parameter

derived from the room impulse response called Center Time. The verification

around the binaural cues was driven with three different rooms (simulated).

As the validation with test subjects was not possible due to the COVID-19

pandemic situation, a psychoacoustic model was implemented to estimate the

spatial accuracy of the method within a four-loudspeaker setup. Also, an in-

vestigation ran the same verification, and the model estimation was performed

with the introduction of hearing aids. The results showed that it is possible

to consider the hybrid method with four loudspeakers for audiological tests

while considering some limitations. The setup can provide binaural cues to a

maximum ambiguity angle of 30 degrees in the horizontal plane for a centered

listener.

ii

Acknowledgements

Thank you,

Obrigado, Gracias, Grazie, Tak skal du have, Dank u zeer & Danke sehr

Firstly, I would like to express my gratitude to my supervisors, Drs. Bill Whit-

mer, Lars Bramsløw, and Graham Naylor, for their guidance, expertise, and

remarkable patience throughout this process. Your support and mentorship

have been invaluable in helping me to fill my knowledge gaps, tirelessly encour-

aging me to ask the right questions, and guiding me to produce high-quality

scientific research. I also thank Dr. Thomas Lunner for his initial guidance,

insightful questions, and comments.

Thank you to the special people at Eriksholm Research Centre in Denmark

and the people at Hearing Sciences – Scottish Section. Working with such

fantastic top teams has been a joy and a privilege. A special thank you to Jette,

Michael, Bo, Niels, Claus, Dorothea, Sergi, Jeppe, James, Lorenz, Johannes,

and Hamish. Thanks to all the people involved in HEAR-ECO for their hard

work, especially Hidde, Beth, Patrycja, Tirdad, and Defne.

I am deeply grateful to my sweet wife, Lilian, for the love, encouragement, and

support she has given me throughout this journey. Her unwavering support

has been an enduring seed of resilience and inspiration. I cannot thank her

enough for being such an integral part of my life.

Thanks to my friends Math and Gil, for always being there. Special thanks to

my former professors, Drs.: Arcanjo Lenzi, William D’Andrea Fonseca, Eric

Brand˜ao, Paulo Mareze, Stephan Paul, and Bruno Sanches Masiero for stimu-

lating critical thinking and for all the support, knowledge, and encouragement.

Thank you also to my former Oticon Medical colleagues Simon Ziska Krogholt,

Patrick Maas, Brian Skov, and Jens T. Balslev for the and support.

I would like to thank you, my Professor, Professora Dra. Dinara Xavier Paix˜ao.

All of this is possible because of you and your determination to create an

official undergraduate course in Acoustical Engineering in Brazil. This course

iii

is praised for forming remarkable professionals who are recognized worldwide.

It is not just my dream that you have made possible, but the countless people

for whom this course has been a life-changing experience. We know that this

was a collective effort, but your role was vital. Your way of showing that

politics is a part of everything, and that we need to be gentle but correct,

made all the difference. Thank you. Muito Obrigado.

I sincerely thank my friends and colleagues from my undergraduate studies

in acoustical engineering (USFM/EAC) and the master’s (UFSC/LVA). Your

support, happiness, patience and encouragement have been invaluable through-

out this journey. Thank you for helping me to develop my skills and knowledge

and for being such a positive influence on my academic career. I am deeply

grateful for all you have done for me and look forward to continuing our pro-

fessional relationship. I want to thank the oldest friends, Fabrício, André, Juliano,

and the Panteon. I value the bond and history that we share. Thank you for

being such wonderful friends.

I want to express my heartfelt thanks to the Brazilian CNPq and the govern-

ment (Lula/Dilma) policies that support students with low income and from

public schools. With their financial assistance, I could pursue my studies and

achieve my goals. I am deeply grateful for their support and the opportunity to

receive a quality education. I would also like to express my gratitude to Marie

Sk lodowska-Curie Actions for their support of my doctoral education. Their

reference programme for doctoral education has provided me with invaluable

resources and opportunities, and I am extremely grateful for their support.

Thank you for helping me achieve my goals and being a valuable part of my

academic journey.

I want to express my gratitude to all those who will read this thesis in the

future. Your time and attention are greatly appreciated. I wish you a good

reading experience and hope that you will find the ideas and research presented

in this work to be both thought-provoking and beneficial. Thank you again

for considering this work.

iv

Author’s Declaration

This thesis is the result of the author’s original research. Chapter 4 is a

collaboration work with Tirdad Seifi-Ala. The author has composed it and

has not been previously submitted for any other academic qualification.

This project has received funding from the European Union’s Horizon 2020

research and innovation programme under the Marie-Sk lodowska-Curie grant

agreement No 765329; The funder had no role in study design.

Sergio Luiz Aguirre

v

Contents

Abstract i

Acknowledgements iii

Author’s Declaration v

Nomenclature xxi

1 Introduction 1

1.1 Motivations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.2 Aims and Scope . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

1.3 Contributions . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.4 Organization of the Thesis . . . . . . . . . . . . . . . . . . . . . 4

2 Literature Review 7

2.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

2.2 Human Binaural Hearing . . . . . . . . . . . . . . . . . . . . . . 8

2.2.1 Spatial Hearing Concepts . . . . . . . . . . . . . . . . . 9

2.2.2 Binaural cues . . . . . . . . . . . . . . . . . . . . . . . . 10

2.2.3 Monaural cues . . . . . . . . . . . . . . . . . . . . . . . . 11

vi

CONTENTS vii

2.2.4 Head-related transfer function . . . . . . . . . . . . . . . 12

2.2.5 Subjective aspects of an audible reflection . . . . . . . . 16

2.3 Spatial Sound & Virtual Acoustics . . . . . . . . . . . . . . . . 17

2.3.1 Virtualization . . . . . . . . . . . . . . . . . . . . . . . . 18

2.3.1.1 Auralization . . . . . . . . . . . . . . . . . . . . 19

2.3.1.2 Reproduction . . . . . . . . . . . . . . . . . . . 21

2.3.2 Auralization Paradigms . . . . . . . . . . . . . . . . . . 22

2.3.2.1 Binaural . . . . . . . . . . . . . . . . . . . . . . 22

2.3.2.2 Panorama . . . . . . . . . . . . . . . . . . . . . 23

2.3.2.3 Sound Field Synthesis . . . . . . . . . . . . . . 31

2.3.3 Room acoustics . . . . . . . . . . . . . . . . . . . . . . . 32

2.3.3.1 Room acoustics parameters . . . . . . . . . . . 33

2.3.3.2 Reverberation Time . . . . . . . . . . . . . . . 33

2.3.3.3 Clarity and Definition . . . . . . . . . . . . . . 35

2.3.3.4 Center Time . . . . . . . . . . . . . . . . . . . 37

2.3.3.5 Parameters related to spatiality . . . . . . . . . 37

2.3.4 Loudspeaker-based Virtualization in Auditory Research . 40

2.3.4.1 Hybrid Methods . . . . . . . . . . . . . . . . . 43

2.3.4.2 Sound Source Localization . . . . . . . . . . . . 45

2.4 Listening Effort Assessment . . . . . . . . . . . . . . . . . . . . 50

2.5 Concluding Remarks . . . . . . . . . . . . . . . . . . . . . . . . 53

3 Binaural cue distortions in virtualized Ambisonics and VBAP 55

CONTENTS viii

3.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

3.2 Methods . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

3.2.1 Setups and system characterization . . . . . . . . . . . . 59

3.2.1.1 Reverberation time . . . . . . . . . . . . . . . . 60

3.2.1.2 Early-reflections . . . . . . . . . . . . . . . . . 61

3.2.2 Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . 62

3.2.3 Calibration . . . . . . . . . . . . . . . . . . . . . . . . . 64

3.2.4 VBAP Auralization . . . . . . . . . . . . . . . . . . . . . 67

3.2.5 Ambisonics Auralization . . . . . . . . . . . . . . . . . . 67

3.3 Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

3.3.1 Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

3.3.2 Centered position . . . . . . . . . . . . . . . . . . . . . . 71

3.3.2.1 Centered ITD . . . . . . . . . . . . . . . . . . . 73

3.3.2.2 Centered ILD . . . . . . . . . . . . . . . . . . . 76

3.3.3 Off-centered position . . . . . . . . . . . . . . . . . . . . 82

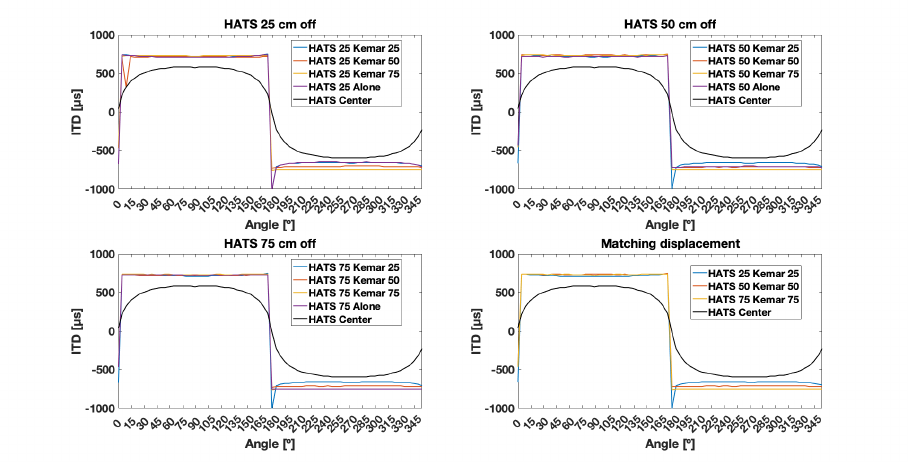

3.3.3.1 Off-center ITD . . . . . . . . . . . . . . . . . . 83

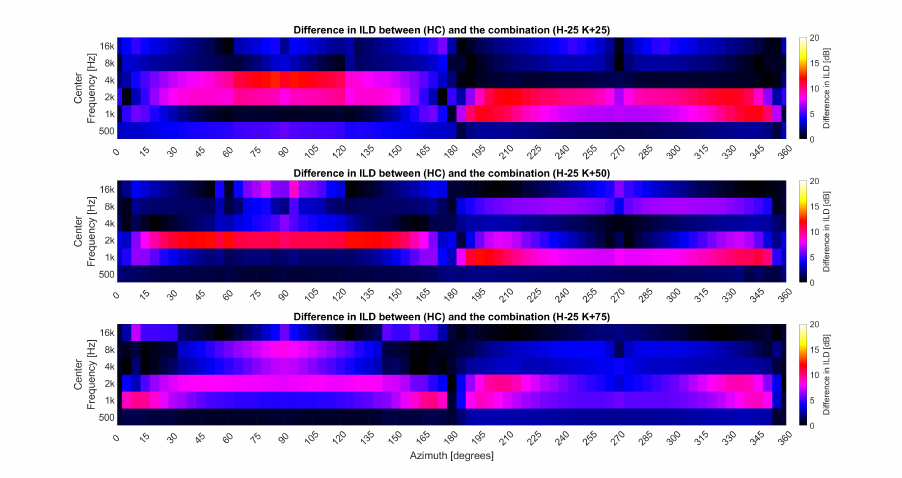

3.3.3.2 Off-center ILD . . . . . . . . . . . . . . . . . . 87

3.4 Discussion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

3.5 Concluding Remarks . . . . . . . . . . . . . . . . . . . . . . . . 95

4 Subjective Effort within Virtualized Sound Scenarios 97

4.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 98

4.2 Methods . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 100

CONTENTS ix

4.2.1 Participants . . . . . . . . . . . . . . . . . . . . . . . . . 102

4.2.2 Stimuli . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

4.2.3 Apparatus . . . . . . . . . . . . . . . . . . . . . . . . . . 104

4.2.4 Auralization . . . . . . . . . . . . . . . . . . . . . . . . . 107

4.2.5 Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . 110

4.2.6 Questionnaire . . . . . . . . . . . . . . . . . . . . . . . . 112

4.2.7 Statistics . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

4.3 Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

4.4 Discussion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

4.5 Concluding Remarks . . . . . . . . . . . . . . . . . . . . . . . . 119

5 Iceberg: A Hybrid Auralization Method Focused on Compact

Setups. 120

5.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

5.2 Iceberg an Hybrid Auralization Method . . . . . . . . . . . . . . 122

5.2.1 Motivation . . . . . . . . . . . . . . . . . . . . . . . . . . 122

5.2.2 Method . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

5.2.2.1 Components . . . . . . . . . . . . . . . . . . . . 124

5.2.2.2 Energy Balance . . . . . . . . . . . . . . . . . . 124

5.2.2.3 Iceberg proposition . . . . . . . . . . . . . . . . 127

5.2.3 Setup Equalization & Calibration: . . . . . . . . . . . . . 133

5.3 System Characterization . . . . . . . . . . . . . . . . . . . . . . 138

5.3.1 Experimental Setup . . . . . . . . . . . . . . . . . . . . . 139

CONTENTS x

5.3.2 Virtualized RIRs & BRIRs . . . . . . . . . . . . . . . . . 139

5.3.3 Conditions . . . . . . . . . . . . . . . . . . . . . . . . . . 142

5.3.4 Reverberation Time . . . . . . . . . . . . . . . . . . . . . 143

5.4 Main Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

5.4.1 Centered Position . . . . . . . . . . . . . . . . . . . . . . 145

5.4.1.1 Interaural Time Difference . . . . . . . . . . . . 145

5.4.1.2 Interaural Level Difference . . . . . . . . . . . . 147

5.4.1.3 Azimuth Estimation . . . . . . . . . . . . . . . 150

5.4.2 Off-Center Positions . . . . . . . . . . . . . . . . . . . . 151

5.4.2.1 Interaural Time Difference . . . . . . . . . . . . 151

5.4.2.2 Interaural Level Difference . . . . . . . . . . . . 153

5.4.2.3 Azimuth Estimation . . . . . . . . . . . . . . . 155

5.4.3 Centered Accompanied by a Second Listener . . . . . . . 156

5.4.3.1 Interaural Time Difference . . . . . . . . . . . . 157

5.4.3.2 Interaural Level Difference . . . . . . . . . . . . 157

5.4.3.3 Azimuth Estimation . . . . . . . . . . . . . . . 159

5.5 Supplementary Test Results . . . . . . . . . . . . . . . . . . . . 163

5.5.1 Centered Position (Aided) . . . . . . . . . . . . . . . . . 164

5.5.1.1 Interaural Time Difference . . . . . . . . . . . . 165

5.5.1.2 Interaural Level Difference . . . . . . . . . . . . 166

5.5.1.3 Azimuth Estimation . . . . . . . . . . . . . . . 166

5.5.2 Off-center Positions (Aided) . . . . . . . . . . . . . . . . 169

CONTENTS xi

5.5.2.1 Interaural Time Difference . . . . . . . . . . . . 169

5.5.2.2 Interaural Level Difference . . . . . . . . . . . . 171

5.5.2.3 Azimuth Estimation . . . . . . . . . . . . . . . 174

5.6 Discussion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 176

5.6.1 Subjective impressions . . . . . . . . . . . . . . . . . . . 180

5.6.2 Advantages and Limitations . . . . . . . . . . . . . . . . 181

5.6.3 Study limitations and Future Work . . . . . . . . . . . . 182

5.7 Concluding Remarks . . . . . . . . . . . . . . . . . . . . . . . . 183

6 Conclusion 184

6.1 Iceberg . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 185

6.2 General Discussion . . . . . . . . . . . . . . . . . . . . . . . . . 187

6.2.1 Iceberg capabilities . . . . . . . . . . . . . . . . . . . . . 189

6.2.2 Iceberg & Second Joint Listener . . . . . . . . . . . . . . 190

6.2.3 Iceberg: Listener Wearing Hearing Aids . . . . . . . . . . 191

6.2.4 Iceberg Limitations . . . . . . . . . . . . . . . . . . . . . 192

6.3 General Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . 193

6.4 Main Contributions . . . . . . . . . . . . . . . . . . . . . . . . . 193

Bibliography 195

Appendices 225

A ITDs Ambisonics 225

B Delta ILD Ambisonics 226

C Wave Equation and Spherical Harmonic Representation 228

C.1 Wave Equation in Spherical Coordinates . . . . . . . . . . . . . 228

C.2 Separation of the Variables . . . . . . . . . . . . . . . . . . . . . 228

C.3 Spherical Harmonics . . . . . . . . . . . . . . . . . . . . . . . . 230

D Reverberation time in Acoustic Simulation 231

E Alpha Coefficients 232

F Questionnaire 234

xii

List of Tables

2.1 Non-exhaustive overview list of hybrid auralization methods

proposed in the literature. The A-B order of the techniques

does not represent any order of significance. . . . . . . . . . . . 45

2.2 Overview of Localization Error Estimates or Measurements from

Loudspeaker-Based Virtualization Systems Using Various Au-

ralization Methods. . . . . . . . . . . . . . . . . . . . . . . . . . 49

3.1 Sound pressure level difference between direct sound and early

reflections ∆ SPL [dB] . . . . . . . . . . . . . . . . . . . . . . . 62

4.1 The questionnaire for subjective ratings of performance, effort

and engagement (English translation from Danish) . . . . . . . 113

4.2 Results of linear mixed model based on SNR and RT predictors

estimates of the questionnaire. . . . . . . . . . . . . . . . . . . . 114

4.3 Pearson skipped correlations between performance and self-reported

questions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

5.1 Reverberation Time in three virtualized environments . . . . . . 144

5.2 One way anova, columns are absolute difference between esti-

mated and reference angles for different KEMAR positions and

RTs. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 162

5.3 Hearing Level in dB according to the proposed Standard Au-

diograms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 164

5.4 One way anova, columns are absolute difference between esti-

mated and reference angles for different positions and RTs. . . . 168

xiii

List of Figures

2.1 Two-dimensional representation of the cone of confusion. . . . . 11

2.2 A descriptive definition of the measured free-field HRTF for a

given angle. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

2.3 Polar coordinate system related to head incidence angles . . . . 13

2.4 Head-related transfer functions of four human test participants,

frontal incidence . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

2.5 Audible effects of a single reflection . . . . . . . . . . . . . . . . 16

2.6 Binaural reproduction setups . . . . . . . . . . . . . . . . . . . . 23

2.7 Vector-based amplitude panning: 2D display of sound sources

positions and weights. . . . . . . . . . . . . . . . . . . . . . . . 25

2.8 Diagram representing the placement of speakers in the VBAP

technique . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

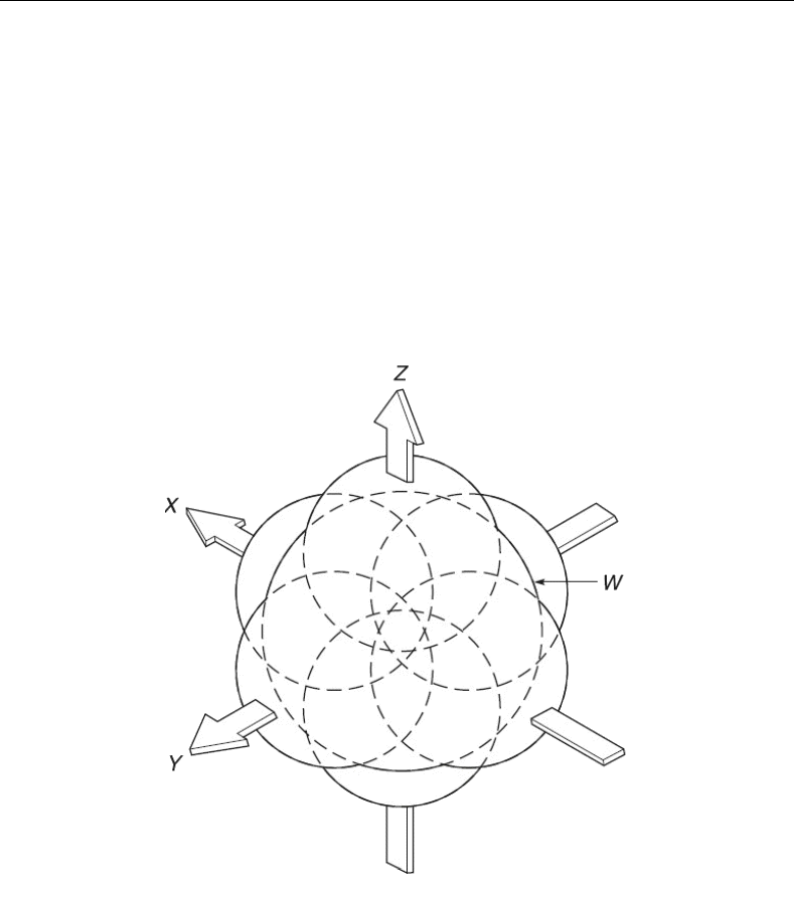

2.9 Spherical Harmonics Y

m

n

(θ, ϕ). . . . . . . . . . . . . . . . . . . . 29

2.10 B-format components: omnidirectional pressure component W,

and the three velocity components X, Y, Z. . . . . . . . . . . . . 30

2.11 Illustration of Huygen’s Principle of a propagating wave front. . 32

2.12 Normalized Room Impulse Response: example from a real room

in the time domain (left), and in the time domain in dB (right). 35

2.13 LoRa implementation processing diagram . . . . . . . . . . . . . 43

3.1 Hearing Sciences - Scottish Section Test Room. . . . . . . . . . 59

xv

LIST OF FIGURES xvi

3.2 Eriksholm Test Room. . . . . . . . . . . . . . . . . . . . . . . . 59

3.3 Reverberation time in third of octave . . . . . . . . . . . . . . . 61

3.4 HATS and KEMAR inside test room in Glasgow . . . . . . . . . 63

3.5 HATS and KEMAR inside Eriksholm’s Anechoic Room . . . . 63

3.6 Description of experiment’s measured positions and mannequin

placement . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

3.7 Interaural cross correlation - Frontal angle . . . . . . . . . . . . 72

3.8 Polar representation IACC . . . . . . . . . . . . . . . . . . . . . 72

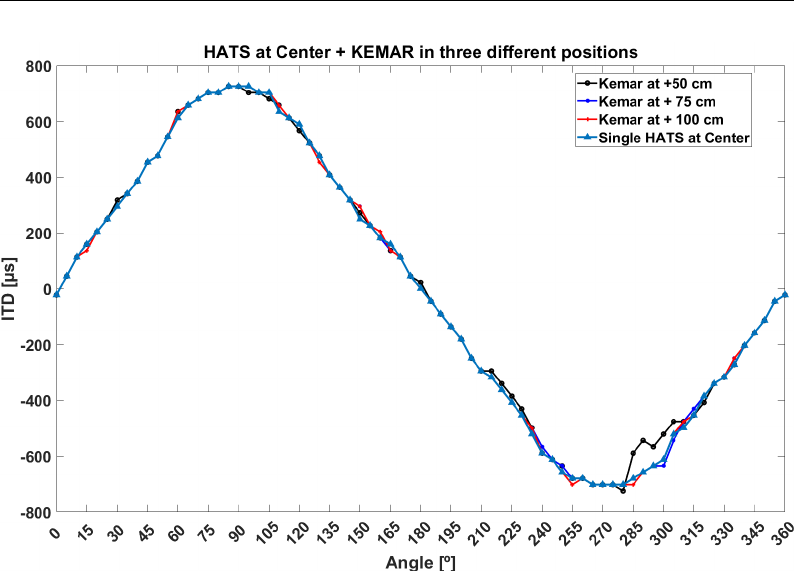

3.9 Interaural Time Difference by angle: VBAP accompanied . . . . 74

3.10 Ambisonics - directivity representation in 2D . . . . . . . . . . 75

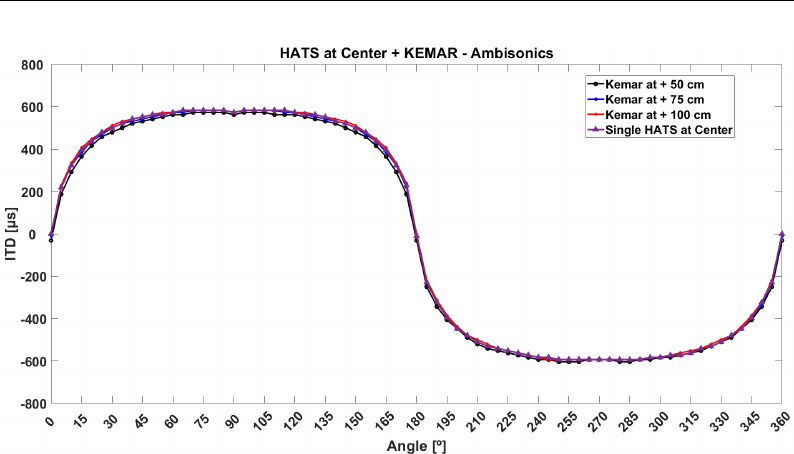

3.11 Interaural Time Difference by angle: Ambisoncis accompanied . 76

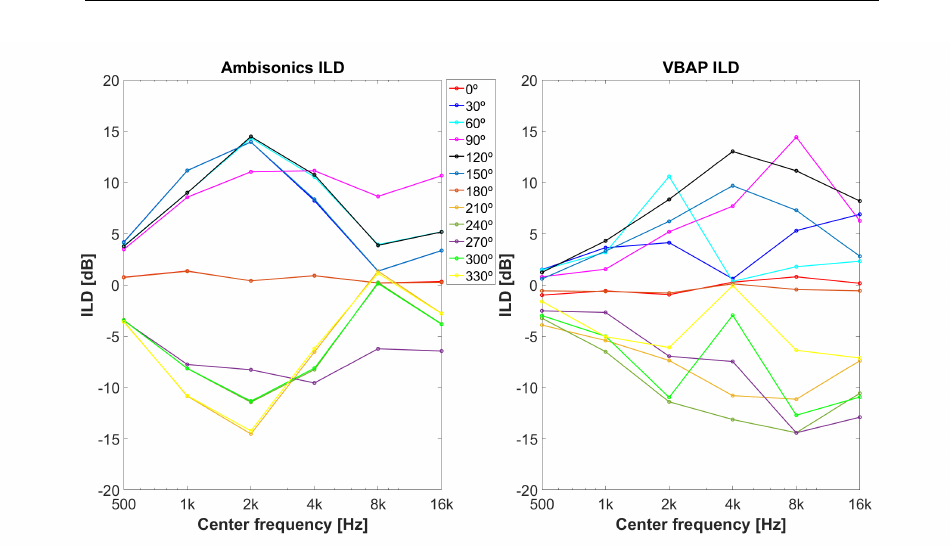

3.12 Interaural Level Differences: VBAP and Ambisonics . . . . . . . 77

3.13 Interaural Level Differences: averaged octave bands as a func-

tion of azimuth angle for a HATS Br¨uel and Kjær TYPE 4128-C

in the horizontal plane. . . . . . . . . . . . . . . . . . . . . . . . 78

3.14 Interaural Level Differences with additional listener (VBAP and

Ambisonics) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

3.15 Discrepancies in Interaural Level Differences (VBAP) . . . . . . 79

3.16 VBAP Interaural Level Differences as function of azimuth angle

around the centered listener. . . . . . . . . . . . . . . . . . . . . 80

3.17 Discrepancies in Interaural Level Differences: Ambisonics . . . . 81

3.18 Ambisonics Interaural Level Differences as function of azimuth

angle around the centered listener. . . . . . . . . . . . . . . . . 82

3.19 VBAP Off center ITD HATS 25 cm . . . . . . . . . . . . . . . . 84

3.20 VBAP Off center ITD HATS 50 cm . . . . . . . . . . . . . . . . 84

3.21 VBAP Off center ITD HATS 75 cm . . . . . . . . . . . . . . . . 85

LIST OF FIGURES xvii

3.22 VBAP Off center ITD displaced HATS . . . . . . . . . . . . . . 85

3.23 VBAP ITD considering real sound sources only . . . . . . . . . 86

3.24 Ambisonics ITD as a function of source angle . . . . . . . . . . 87

3.25 ILD (VBAP and Ambisonics) in off-center setups . . . . . . . . 88

3.26 ILD centered setup and off-center VBAP setups . . . . . . . . . 89

3.27 Differences in the ILD between centered and off-center (25 cm)

in VBAP setups . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

3.28 Differences in the ILD between centered and off-center (50 cm)

in VBAP setups . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

3.29 Differences in the ILD between centered and off-center (75 cm)

in VBAP setups . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

3.30 ILD centered setup and off-center Ambisonics setups . . . . . . 92

4.1 Auralization procedure implemented to create mixed audible

HINT sentences with 4 spatially separated talkers at the sides

and back (maskers) and one target in front. . . . . . . . . . . . 104

4.2 Spatial setup of the experiment . . . . . . . . . . . . . . . . . . 105

4.3 Eriksholm Anechoic Room: Reverberation Time . . . . . . . . . 105

4.4 Eriksholm Anechoic Room: Background noise . . . . . . . . . . 106

4.5 Experiment setup placed inside anechoic room. . . . . . . . . . . 106

4.6 Overall reverberation time (RT) as a function of receptor (head)

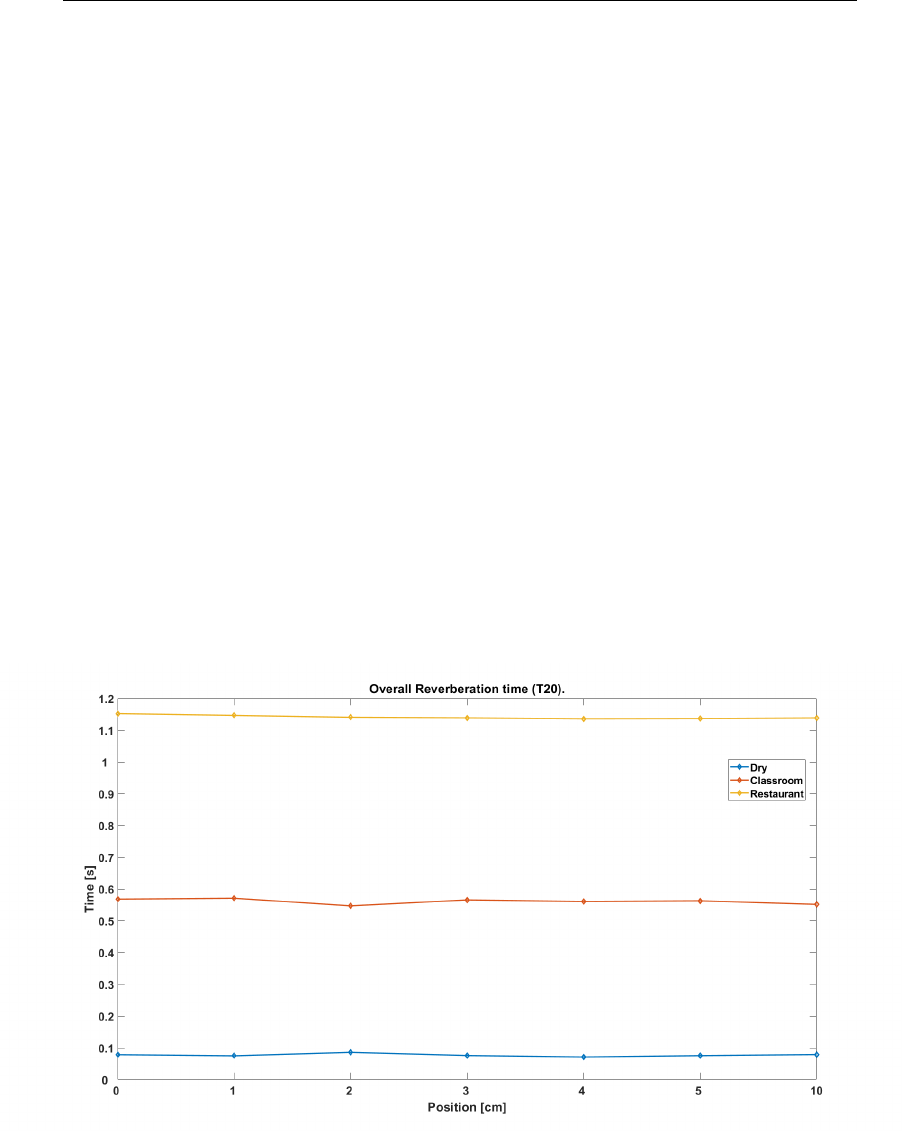

position in the mid-saggital plane re center (0 cm) . . . . . . . . 108

4.7 Sound pressure level Ambisonics virtualized setup . . . . . . . . 109

4.8 Participant positioned to the test. . . . . . . . . . . . . . . . . . 110

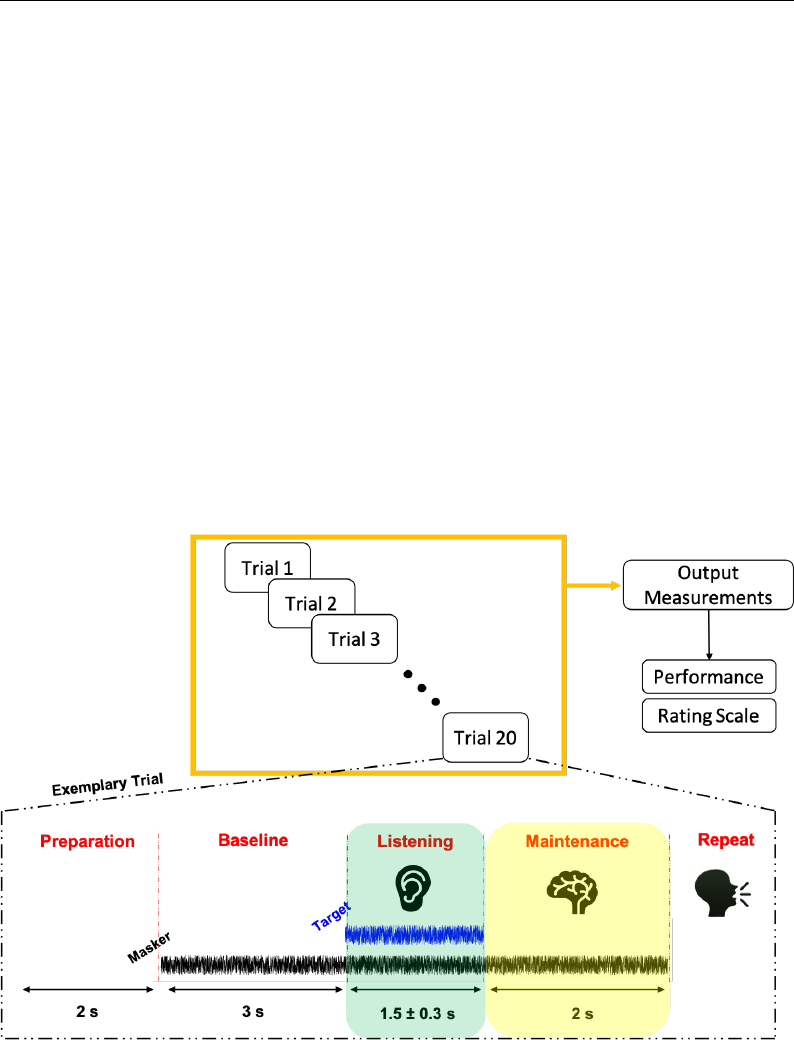

4.9 Experiment’s trial design . . . . . . . . . . . . . . . . . . . . . . 111

4.10 Graphic User Interface . . . . . . . . . . . . . . . . . . . . . . . 112

LIST OF FIGURES xviii

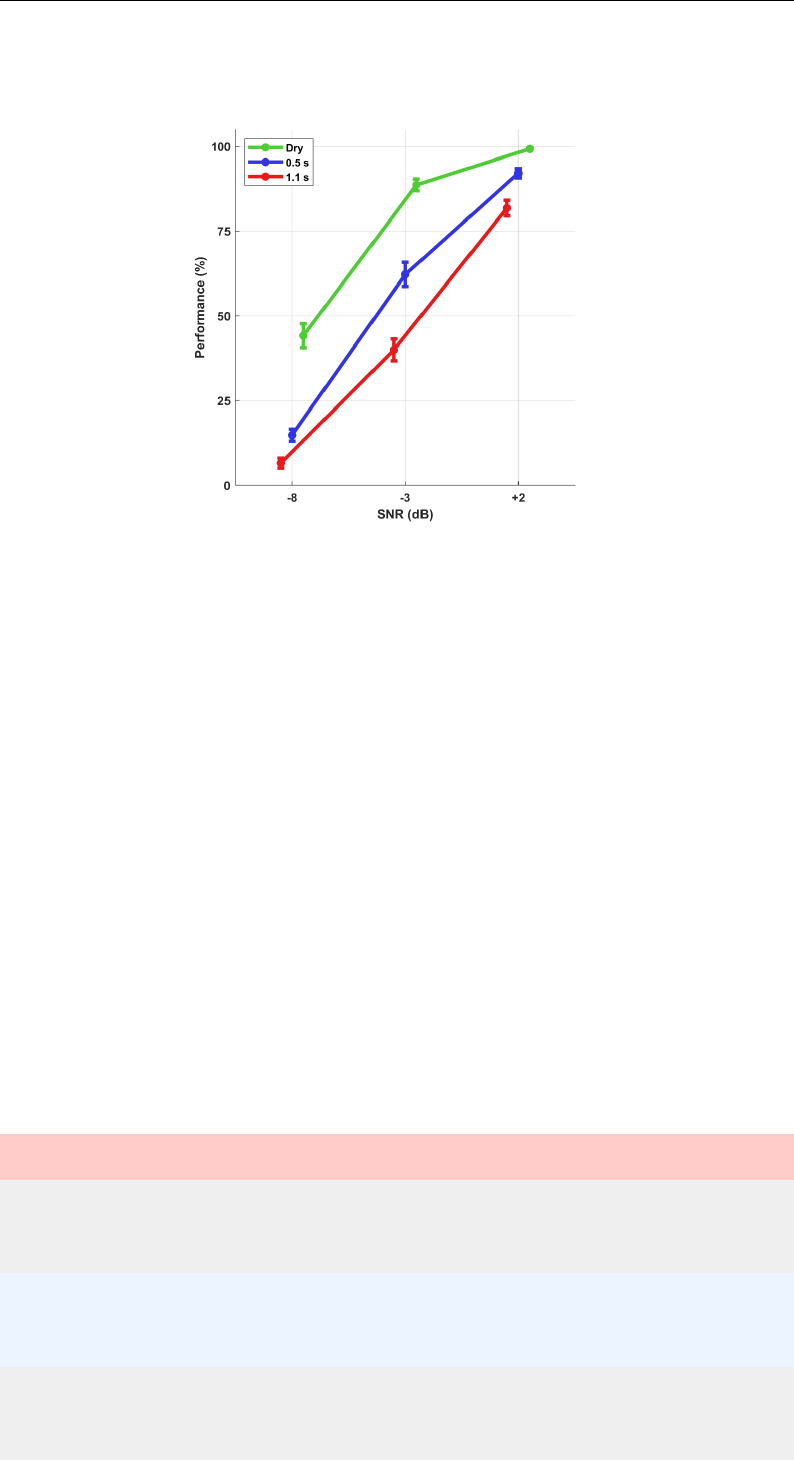

4.11 Performance accuracy (word-scoring) . . . . . . . . . . . . . . . 114

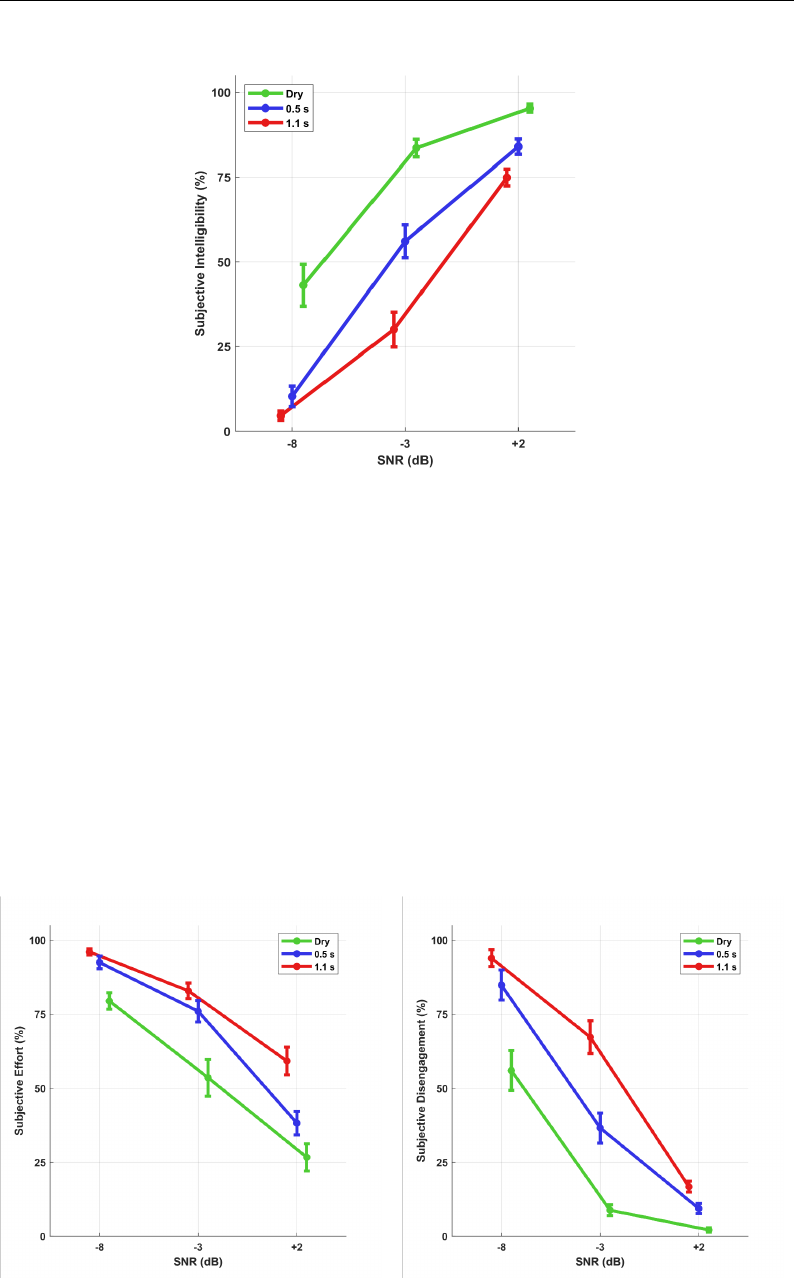

4.12 Self reported subjective intelligibility . . . . . . . . . . . . . . . 115

4.13 Self reported subjective effort . . . . . . . . . . . . . . . . . . . 115

4.14 Self reported subjective disengagement . . . . . . . . . . . . . . 115

5.1 Top view. Loudspeakers position on horizontal plane to virtu-

alization with proposed Iceberg method. . . . . . . . . . . . . . 123

5.2 Normalized Ambisonics first-order RIR generated via ODEON

software. Left panel depicts the waveform; right panel depicts

the waveform in dB. . . . . . . . . . . . . . . . . . . . . . . . . 125

5.3 Reflectogram split into Direct Sound Early and Late Reflections. 126

5.4 Iceberg’s processing Block diagram. The Ambisonics RIR is

treated, split, and convolved to an input signal. A virtual audi-

tory scene can be created by playing the multi-channel output

signal with the appropriate setup.. . . . . . . . . . . . . . . . . 129

5.5 Omnidirectional channel of Ambisonics RIR for a simulated room.129

5.6 RIR Ambisonics segments . . . . . . . . . . . . . . . . . . . . . 130

5.7 Example of signal auralized with the Iceberg method . . . . . . 132

5.8 Loudspeaker frequency response comparison . . . . . . . . . . . 135

5.9 Loudspeakers normalized frequency response . . . . . . . . . . . 135

5.10 Loudspeakers normalized frequency response filtered . . . . . . . 136

5.11 BRIR/RIR acquisition flowchart: Iceberg auralization method. . 141

5.12 BRIR measurement setup: B&K HATS and KEMAR positioned

inside the anechoic room. . . . . . . . . . . . . . . . . . . . . . . 141

5.13 Measurement positions (grid) . . . . . . . . . . . . . . . . . . . 142

5.14 RT within Iceberg virtualized environment . . . . . . . . . . . . 144

5.15 Iceberg Centered Interaural Time Difference . . . . . . . . . . . 145

LIST OF FIGURES xix

5.16 Iceberg Centered Interaural Time Difference RTs = 0, 0.5 and

1.1 s . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 146

5.17 Iceberg centered ILD . . . . . . . . . . . . . . . . . . . . . . . . 147

5.18 Iceberg and Real loudspeakers ILDs as a function of azimuth

angle . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 148

5.19 Iceberg Heatmap Absolute ∆ ILD . . . . . . . . . . . . . . . . 148

5.20 Iceberg: Heatmap Absolute ∆ ILD minus JND . . . . . . . . . . 149

5.21 Iceberg method: Estimated azimuth angle . . . . . . . . . . . . 150

5.22 Iceberg ITD Frontal displacement . . . . . . . . . . . . . . . . . 152

5.23 Iceberg ITD Frontal and lateral displacement . . . . . . . . . . 152

5.24 Delta Interaural Level Differences RT = 0.0 s . . . . . . . . . . 153

5.25 Delta Interaural Level Differences RT = 0.5 s . . . . . . . . . . 154

5.26 Delta Interaural Level Differences RT = 1.1 s . . . . . . . . . . 155

5.27 Estimated (model by May and Kohlrausch [182]) frontal az-

imuth angle at different positions inside the loudspeaker ring as

function of the target angle. . . . . . . . . . . . . . . . . . . . . 156

5.28 ITD with second listener present . . . . . . . . . . . . . . . . . 157

5.29 Delta Interaural Level Differences Centered+Second Listener . . 158

5.30 Estimated localization error with presence of a second listener . 160

5.31 Difference to target in estimated localization with presence of a

second listener . . . . . . . . . . . . . . . . . . . . . . . . . . . . 161

5.32 Estimated error to RT=0 considering the estimation of real

loudspeakers as basis. . . . . . . . . . . . . . . . . . . . . . . . . 162

5.33 HATS wearing a Oticon Hearing Device (right ear) . . . . . . . 164

5.34 Interaural Time Difference Iceberg method (aided) . . . . . . . . 165

5.35 Iceberg method ILD (aided condition) . . . . . . . . . . . . . . 166

5.36 Azimuth angle estimation (aided condition) . . . . . . . . . . . 167

5.37 Absolute difference in estimated azimuth angle (aided condition) 168

5.38 Tukey test to compare means aided condition. . . . . . . . . . . 169

5.39 Iceberg method off center ITD (aided condition) . . . . . . . . . 170

5.40 Delta Interaural Level Differences Aided RT = 0.0 s . . . . . . . 172

5.41 Delta Interaural Level Differences Aided RT = 0.5 s . . . . . . . 172

5.42 Delta Interaural Level Differences Aided RT = 1.1 s . . . . . . . 173

5.43 Estimated frontal azimuth angle on different positions (aided

condition) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 174

A.1 ITD as a function of source angle Ambisonics . . . . . . . . . . 225

B.1 Differences in the ILD Ambisonics, 25 cm . . . . . . . . . . . . . 226

B.2 Differences in the ILD Ambisonics, 50 cm . . . . . . . . . . . . . 227

B.3 Differences in the ILD Ambisonics, 75 cm . . . . . . . . . . . . . 227

D.1 Reverberation time (a) Classroom (b) Restaurant . . . . . . . . 231

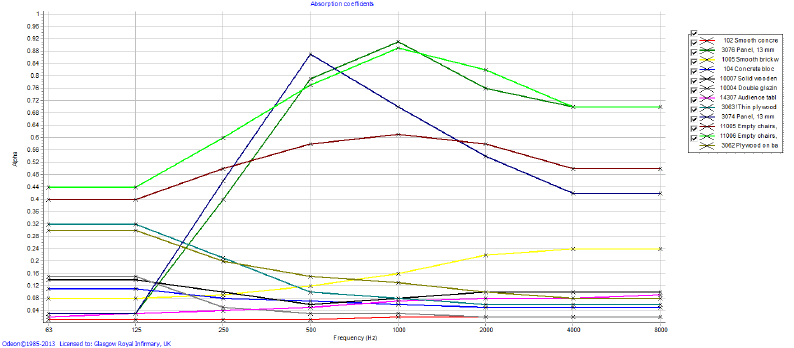

E.1 Classroom alpha coefficients . . . . . . . . . . . . . . . . . . . . 232

E.2 Restaurant alpha coefficients . . . . . . . . . . . . . . . . . . . . 233

E.3 Anechoic alpha coefficients . . . . . . . . . . . . . . . . . . . . . 233

xx

Nomenclature

General Symbols

C

50

Clarity: the ratio between the first 50 ms of the RIR and from 50 ms

to the end, Eq. (2.12), page 36.

C

80

Clarity: the ratio between first 80 ms of the RIR and RIR from 80 ms

to the end, Eq. (2.12), page 36.

D

50

Clarity: ratio between first RIR 50 ms of a RIR and the complete

RIR, Eq. (2.14), page 37.

D

80

Clarity: ratio between first 80 ms of a RIR and the the complete

RIR, Eq. (2.14), page 37.

g Gain matrix, page 25.

h(t) Impulse response energy in time domain, Eq. (2.10), page 35.

h

b

(t) RIR measured with a pressure gradient microphone, Eq. (2.16), page 38.

h

L

(t) Impulse responses collected from the left ear, page 39.

h

R

(t) Impulse responses collected from the right ear, page 39.

l

1

Vector from center point to channel 1, Eq. (2.3), page 25.

l

2

Vector from center point to channel 2, Eq. (2.3), page 25.

L

12

Speaker Position Matrix (Channels), page 25.

m Ambisonics components order, Eq. (2.9), page 30.

N number of necessary sources to Ambisonics reproduction, Eq. (2.9),

page 30.

p Vector from center point to virtual font, Eq. (2.3), page 25.

xxi

p p-value for the t-statistic of the hypothesis test that the correspond-

ing coefficient is equal to zero or not., page 114.

p

n

L

(t) Bandpassed left impulse response, Eq. (3.7), page 71.

p

n

R

(t) Bandpassed right impulse response, Eq. (3.7), page 71.

p

L

(t) Impulse response at the entrance of the left ear canal, Eq. (3.5),

page 70.

p

R

(t) Impulse response at the entrance of the right ear canal, Eq. (3.5),

page 70.

RT Reverberation time, page 34.

RT

60

Reverberation time, page 34.

s(t) Arbitrary sound source signal, page 29.

S

l

(t) Time signal recorded with the set microphone and the loudspeaker

l, page 66.

SE Standard error of the coefficients, page 114.

T

20

Reverberation Time (T

60

) extrapolated from 25 dB of energy decay,

Eq. (2.10), page 35.

T

30

Reverberation Time (T

60

) extrapolated from 35 dB of energy decay,

Eq. (2.10), page 35.

t Time, page 12.

t

s

Center Time, Eq. (2.15), page 37.

T

60

Reverberation Time, Eq. (2.10), page 34.

v(t)

1kHz

Sinusoidal 1 k Hz signal recorded from the calibrator in VFS, Eq. (3.2),

page 66.

V Volume of the room, Eq. (2.10), page 34.

v

l

(t) Calibrator signal recorded in the left ear, Eq. (3.1), page 65.

v

r

(t) Calibrator signal recorded in the right ear, Eq. (3.1), page 65.

Greek Symbols

α

l,rms

Calibration factor for the left ear, Eq. (3.1), page 65.

xxii

α

r,rms

Calibration factor for the right ear, Eq. (3.1), page 65.

¯α Averaged Absorption Coefficient, Eq. (2.10), page 34.

Γ

l

Level factor to the loudspeaker l, Eq. (3.3), page 66.

ω Angular frequency, page 12.

ϕ Elevation angle related to the ears axis of the listener, page 29.

θ Azimuthal angle related to the ears axis of the listener, page 29.

Mathematical Operators and Conventions

β Fixed-effects regression coefficient, page 114.

e Exponential function, where e

(1)

≈ 2, 7182, page 12.

R

Integral, page 12.

j

√

−1, imaginary operator, page 12.

τ Time delay, Eq. (2.18), page 39.

t

x

t-statistic for each coefficient to test the null hypothesis, page 114.

Y

m

n

(θ, ϕ) Spherical harmonics function of order n and degree m, Eq. (2.7),

page 29.

L

eq

Equivalent continuous sound level, page 103.

max() Function that returns the element with the maximum value for a

sequence of numbers, or for a vector, Eq. (2.18), page 39.

RMS() Root mean square, Eq. (3.2), page 66.

Acronyms and Abbreviations

2D Two-dimensions in space, page 24.

3D Three-dimensions in space, page 24.

vs. From Latin Versus is the past participle of vertare. which means

“against” and “as opposed or compared to., page 81.

AD/DA Analog-to-Digital Digital-to-Analog converter, page 59.

AR Augmented reality, page 27.

xxiii

ASW Apparent Source Width, page 38.

BRIR Binaural room impulse response, page 15.

CTC Cross-talk cancellation, page 23.

dB HL Hearing Loss in decibels, page 102.

DBAP Distance-Based Amplitude Panning, page 47.

DS Direct sound, page 15.

EcoEG Combination study number 3: Eco (Reverberation/Ecological) and

EEG, page 97.

EEG Electroencephalogram, page 50.

FFRs Brainstem frequency responses, page 50.

FFT Fast Fourier transform, page 70.

FIR Finite Impulse Response, page 71.

HATS Head and torso simulator, page 60.

HC B& K 4128 HATS at center position, page 79.

HC

K+X

B& K 4128 HATS at center position and KEMAR at X cm to the

left, page 80.

HC K-

X

B& K 4128 HATS at center position and KEMAR at X cm to the

right, page 79.

HEAR-

ECO

Innovative Hearing Aid Research – Ecological Conditions and Out-

come Measures, page 97.

HINT Hearing in Noise Test, page 103.

HOA Higher Order Ambisonics, page 31.

HRTF Head-Related Transfer Function, page 12.

IACC Interaural Cross-Correlation Coefficient, page 39.

IACF Interaural cross-correlation function, Eq. (3.5), page 70.

ILD Interaural Level Difference, page 10.

IPD Interaural Phase Difference, page 10.

xxiv

ITD Interaural Time Difference, page 10.

ITF Interaural Transfer Function, page 14.

JND Just noticeable difference, page 93.

KEMAR Knowles Electronics Manikin for Acoustic Research, page 60.

LEF Lateral Energy Fraction, Eq. (2.16), page 38.

LEV Listener Envelopment , page 39.

LG Lateral Strength, Eq. (2.17), page 39.

LMM Linear Mixed-effect Model, page 113.

LPF Low-pass filter, page 73.

LTI Linear and Time-Invariant System, page 33.

MDAP Multiple-Direction Amplitude Panning, page 47.

MOA Mixed Order Ambisonics, page 52.

MTF Monaural Transfer Function, page 13.

NSP Nearest Speaker, page 52.

PLE Perceptual Localization Error, page 41.

PTA4 Four bands pure tone audiometry, page 102.

RIRs Room impulse response, page 15.

SH Spherical Harmonics, page 28.

SPL Sound pressure level, page 35.

SRT Speech Reception Threshold, page 52.

VBAP Vector-Based Amplitude Panning, page 23.

VBIP Vector-Based Intensity Panning, page 41.

VFS Volts full scale, page 66.

VSE Virtual Sound Environment, page 18.

W Omnidirectional channel, Eq. (2.9), page 29.

WFS Wave Field Synthesis, page 31.

xxv

Chapter 1

Introduction

Individuals with normal hearing often can effortlessly comprehend complex

listening scenarios involving multiple sound sources, background noise, and

echoes [226]. However, those with hearing loss may find these situations par-

ticularly challenging [273, 289, 304, 317]. These environments are commonly

encountered in daily life, particularly during social events. They can negatively

impact the communication abilities of individuals with hearing loss [137, 260].

The difficulties associated with understanding complex listening scenarios can

be a significant barrier for individuals with hearing loss, leading to reduced

participation in social activities [16, 63, 119].

1.1 Motivations

Several hearing research laboratories worldwide are developing systems to re-

alistically simulate challenging scenarios through virtualization to better un-

derstand and help with these everyday challenges in people’s lives [41, 79,

102, 116, 118, 160, 161, 188, 195, 218–220, 259, 272, 298] The virtualization

of sound sources is a powerful tool for auditory research capable of achieving

1

Chapter 1. Aims and Scope 2

a high level of detail, but current methods use expensive, expansive technol-

ogy [293]. In this work, a new auralization method has been developed to

achieve sound spatialization with a reduction in the technological hardware

requirement, making virtualization at the clinic level possible.

1.2 Aims and Scope

Overall the objective of the research was to investigate parameters of sound

virtualization methods related to its localization accuracy, especially the per-

ceptually based ones [39], in their optimal but also in challenging conditions.

Furthermore, an auralization method oriented to a smaller setup to reduce the

hardware requirements is proposed.

The specific objectives were:

• To investigate spatial distortions through binaural cue differences in two

well-known virtualization setups (Vector-Based Amplitude Panning and

Ambisonics (VBAP)).

• To investigate the influence of a second listener inside the sound field

(VBAP and Ambisonics).

• To evaluate the feasibility of a speech-in-noise test within Ambisonics

virtualized reverberant rooms.

• To study the relation between reverberation, signal-to-noise ratio (SNR),

and listening effort in environments virtualized in first-order Ambisonics.

• To investigate the binaural cues, objective level and reverberation time

for a new auralization method utilizing four loudspeakers.

Chapter 1. Contributions 3

• To investigate the influence of hearing aids on binaural cues and objec-

tive parameters within virtualized scenes utilizing the new auralization

method with an appropriate setup.

The main objective of this research was to examine various parameters of sound

virtualization methods related to their localization accuracy, with a focus on

perceptually-based methods [39], in optimal and challenging conditions. Addi-

tionally, a new auralization method was proposed for a smaller setup to reduce

hardware requirements. The specific goals of the research included:

• Examining spatial distortions through differences in binaural cues in two

well-known virtualization setups (Vector-Based Amplitude Panning and

Ambisonics (VBAP)).

• Evaluating a second listener’s impact within the sound field (VBAP and

Ambisonics).

• Assessing the feasibility of a speech-in-noise test within Ambisonics vir-

tualized reverberant rooms.

• Investigating the relationship between reverberation, signal-to-noise ratio

(SNR), and listening effort in environments virtualized using first-order

Ambisonics.

• Using four loudspeakers, propose an auralization method, measure it,

analyze objective parameters against existent methods.

• Test and analyze the influence of acquiring signals with hearing aids

microphones on virtualized scenes using the new auralization method

with a four-loudspeaker virtualization setup.

Chapter 1. Contributions 4

1.3 Contributions

The main contribution of this research to the scientific field of auditory per-

ception is the development of a new auralization method that addresses the

current gap in the virtualization of sound sources using a small number of

loudspeakers. Specifically, this method aims to achieve both good localization

accuracy and a high level of immersion simultaneously, which has been a chal-

lenge in previous approaches. Furthermore, the proposed method combines

existing techniques. It can be implemented using readily available hardware,

requiring a minimum of four loudspeakers. This technology makes it more

accessible for audiologists and researchers to create realistic listening scenarios

for patients and participants while reducing the technical resources required for

implementation. Overall, this work represents a valuable contribution to the

field of auditory perception and has the potential to advance the understanding

of spatial hearing and the development of effective hearing solutions.

1.4 Organization of the Thesis

In Chapter 2, a review examines previous work carried out in several dif-

ferent areas concerning virtualization and the auralization of sound sources.

The chapter starts with an overview of the basic concepts of human sound

perception. Next, virtual acoustics are explored, reviewing the generation of

virtual acoustic environments using different rendering paradigms and meth-

ods. In addition, relevant room acoustics concepts and objective parameters,

and their relation to hearing perception, are described. Finally, the review

considers auralization and virtualization as applied to auditory research. This

review stresses the importance of virtual sound sources for greater realism

and ecological validity in auditory research and the challenges of adequately

Chapter 1. Organization of the Thesis 5

creating a virtual environment focused on auditory research.

Chapter 3 presents an investigation of binaural cue distortions in imperfect

setups. First, the methods are described, including the complete auralization

of signals using two different methods and the system’s calibration. The inves-

tigation first compares both auralization methods through the same calibrated

virtualization setup in terms of spatial distortions. Then the spatial cues are

examined with the addition of a second listener to the virtualized sound field.

Both investigations are performed with the primary listener on and off-center.

In Chapter 4, a behavioral study examines subjective effort within virtualized

sound scenarios. As the study was part of a collaborative project, only one

auralization method was selected, first-order Ambisonics. The aim was to ex-

amine how SNR and reverberation combine to affect effort in a speech-in-noise

task. Also, the feasibility of using first-order Ambisonics was examined. How-

ever, the sound sources were well separated in space, and localization accuracy

was not a factor. An important aspect of the study was an auralization issue

involving head movement observed during pilot data collection. This issue

led to a solution that allowed the study to continue. The results verified the

relationships between subjective effort and acoustic demand. Furthermore,

this issue led to the further investigation of the effect of off-center listening,

considered in both Chapter 3 and Chapter 5.

In Chapter 5, a hybrid method of auralization is proposed combining the meth-

ods examined and used in previous chapters: VBAP and Ambisonics. This

method was designed to allow auralized signals to be virtualized in a small

reproduction system, thus providing better accessibility to research within the

virtualized sound field in clinics and research centers that do not have a size-

able acoustic apparatus. The hybrid auralization method aims to unite the

strengths of both techniques: localization by VBAP and immersion by Am-

Chapter 1. Organization of the Thesis 6

bisonics. Both of these psychoacoustic strengths are related to the room’s im-

pulse response. The hybrid method convolves the desired signal with distinct

parts of an Ambisonics-format impulse response that characterizes the desired

environment. The potential for generating auralizations for a reproduction

system with at least four loudspeakers is demonstrated. The virtualization

system was tested with three different scenarios. Parameters relevant to the

perception of a scene, such as reverberation time, sound pressure level, and

binaural cues, were evaluated in different positions within the speaker arrange-

ment. The effects of a second participant inside the ring were also investigated.

The evaluated parameters were as expected, with the listener in the system’s

center (sweet spot). However, deviations and issues at specific presentation

angles were identified that could be improved in future implementations. Such

errors also need to be further investigated as to their influence on the subjec-

tive perception of the scenario, which was not performed due to the COVID-19

pandemic. An alternative robustness assessment was performed offline, exam-

ining the localization accuracy with a model proposed by May et al. [182] The

method also proved effective for tests with hearing aids for listeners positioned

in the center of the speaker arrangement. However, the method performance

considering hearing instruments with compression algorithms and advanced

signal processing still needs to be verified.

Chapter 6 presents a general discussion of the feasibility of applying tests

using the proposed method and an overview of the processes. In addition, the

relevant contributions of the work are presented, as are the limitations and the

suggestions for further improvements.

Chapter 2

Literature Review

2.1 Introduction

The field of audiology is concerned with the study of hearing and hearing dis-

orders, as well as the assessment and rehabilitation of individuals with hearing

loss [110]. In this review chapter, we will explore various topics related to

human binaural hearing, spatial sound, and virtual acoustics to provide a

comprehensive overview of the current state of knowledge in these fields and

highlight their important contributions to our understanding of hearing and

auditory perception. First, we will delve into the intricacies of human binaural

hearing. Next, we will examine the concepts of spatial hearing, including the

various binaural and monoaural cues that contribute to our ability to local-

ize sound in space. We will also explore the head-related transfer function,

which describes the way that sounds are filtered as they travel from their

source to the ear drum, as well as the subjective aspects of audible reflections.

Next, we will turn our attention to spatial sound and virtual acoustics. We

will discuss the virtualization of sound, including the various methods used

to achieve this, such as auralization and virtual sound reproduction. We will

7

Chapter 2. Human Binaural Hearing 8

also examine the different auralization paradigms used in auditory research,

including binaural, panorama, vector-based amplitude panning, ambisonics,

and sound field synthesis. We will then examine the role of room acoustics in

virtualization, and auditory research, including the various parameters, used

to describe room acoustics, such as reverberation time, clarity and definition,

center time, and parameters related to spatiality. Finally, we will explore the

use of loudspeaker-based virtualization in auditory research, including hybrid

methods and sound source localization, as well as the assessment of listening

effort.

2.2 Human Binaural Hearing

The engineering side of the listening process can be simplified modeled through

two input blocks separated in space [92]. These inputs, frequency, and level

are limited and are followed by a signal processing chain that relates the

medium transformations for the wave propagation from air to fluid and elec-

trical pulses [315].

Although this block modeling can be reasonably accurate for educational pur-

poses, it falls short of capturing the true effect and importance of listening

on our essence as human beings. The ability to feel and interpret the world

through the sense of hearing, and to attribute meaning to sound events, enables

humans to enrich their tangible world [56, 244]. For instance, a characteristic

sound can evoke memories or trigger an alert [128]. A piece of music can bring

tears to one’s eyes or persuade someone to purchase more cereal [13, 114]. A

person’s voice can activate certain facial nerves, turning hidden teeth into a

smile. These are some of the reasons why researchers and clinicians dedicate

their lives to understanding the transformation of sound events into auditory

events, with a scientific dedication focused on creating solutions and opening

Chapter 2. Human Binaural Hearing 9

opportunities for more people to experience the sound they love and deserve -

a dedication focused on people and their needs.

As the auditory system comprises two sensors, normal-hearing listeners can

experience the benefits of comparing sounds autonomously, relating them to

the space around them [21]. This constant signal comparison is the main

principle of binaural hearing, where the differences between these sounds allow

for the identification of the direction of a sound event, as well as the sensation

of sound spatiality [9, 40]. Usually, these signals are assumed to be part of a

linear and time-invariant system, which helps to study how humans interpret

the information present in the different signals across the time and frequency

domains. However, this assumption of linearity can fail when analyzing fast

sound sources, reflective surfaces, or sound propagating through disturbed

air [200, 255]. Nonetheless, the advantages of quantifying and capturing the

effect have led to significant progress in hearing sciences.

2.2.1 Spatial Hearing Concepts

Identifying the direction of incidence of a sound source based on the audible

waves received by the listener is defined as an act or process of human sound

localization [285]. For research in acoustics, it is relevant to acknowledge that

the receiver is, in general, a human being. The human hearing mechanism’s

main anatomical characteristic is the binaural system. There are two signal

reception points (external ears positioned on opposite sides of the head). Al-

beit, the whole set (torso, head, hearing pavilions) can also modify the signal

that reaches the two tympanic membranes at some extent [153, 216]. Hu-

man binaural hearing and associated effects have been extensively reported by

Blauert [38].

Chapter 2. Human Binaural Hearing 10

In addition to analyzing sound sources’ spatial location, the central auditory

system extracts real-time information from the sound signals related to the

acoustic environment, such as geometry and physical properties [153]. An-

other benefit is the possibility of separating and interpreting combined sounds,

especially from sources in different directions [170, 242].

2.2.2 Binaural cues

The sound propagation speed in the air can be assumed to be finite and

approximately constant, considering it as an approximately non-dispersive

medium [18]. Thus, when the incidence is not directly frontal or rear, the

wavefront travels through different paths to the ears, reaching them at differ-

ent times. The time interval between that a sound takes to arrive on both

ears is commonly expressed in the literature as Interaural Time Difference

(ITD) [39]. It is crucial cue for sound source localization in low-frequency

sounds [39, 153, 242]. Moreover, it is considered the primary localization

cue [306]. For continuous pure tone signals and other periodic signals, the

ITD can be expressed as the time Interaural Phase Difference (IPD) [285].

On the other hand, most mammals’ high-frequency sound source localization

is based on a comparative analysis of sound energy in each ear’s frequency

bands, the Interaural Level Difference (ILD). The named duplex theory sur-

mises ITD cues as the basis to sound localization of low-frequency and ILD

cues to high-frequency. The authorship of this is assigned to Lord Rayleigh at

the beginning of the last century [246]. These binaural cues are related to the

azimuthal position. However, they do not present the same success explaining

the localization on elevated positions [37, 250]. An ambiguity in binaural cues

caused by head symmetry and referred to as the cone of confusion [296] can

create difficulties to a correct sound source localization. The cone of confusion

is the imaginary cone extended sideways from each ear where sound source

Chapter 2. Human Binaural Hearing 11

locations will create the same interaural differences (see Figure 2.1).

Figure 2.1: Two-dimensional representation of the cone of confusion.

Head movements are essential for resolving the ambiguous cues from sound

sources located on the cone of confusion. As the person moves their head,

they change the reference and the incidence angle helping them to solve the

duality. This change is reflected in the cues associated with directional sound

filtering caused by the human body’s reflection, absorption, and diffraction.

2.2.3 Monaural cues

Monoaural cues are related to spatial impression, especially in the localization

of elevated sound sources. These cues give, to some extent, some limited but

crucial localization abilities to people with unilateral hearing loss [72, 307].

This type of cue is centered on instant level comparison and frequency changes.

As the level of a continuous enough sound source changes, the approximation

or distancing of that source can be estimated. Furthermore, when there are

head movements that shape the frequency content, the disturbance, mainly

the pinnae provide, can benefit the listener to learn the position of a sound

source [129, 292]. In addition, the importance of the previous knowledge of

the sound to the deconvolution process is also investigated, revealing mixed

results [307].

Chapter 2. Human Binaural Hearing 12

2.2.4 Head-related transfer function

The Head-Related Transfer Function (HRTF) describes the directional filtering

of incoming sound due to human body parts such as the head and pinnae [189].

The free-field HRTF can be expressed as the division of the impulse responses

in the frequency domain measured at the entrance to the ear canal and the

center of the head but with the head absent [108] (see Figure 2.2).

HRTFs depend on the direction of incidence of the sound and are generally

measured for some discrete incidence directions. Mathematical models can

also generate individualized HRTFs based on anthropometric measures [52] or

through geometric generalization [70].

Figure 2.2: A descriptive definition of the measured free-field HRTF for a given

angle.

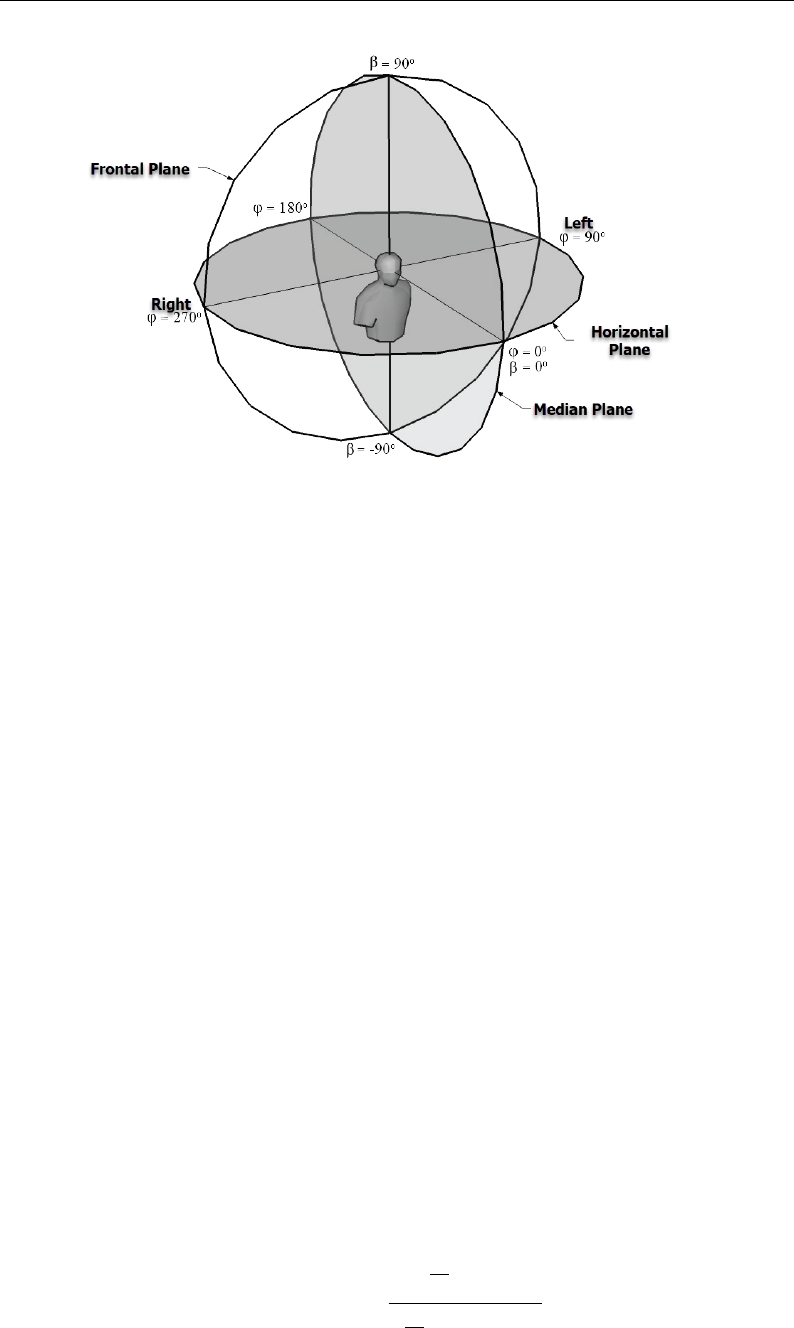

The referential system related to the head can be seen in Figure 2.3, where

β is the elevation angle in the midplane, and ϕ is the angle defined in the

horizontal plane.

Chapter 2. Human Binaural Hearing 13

Figure 2.3: Polar coordinate system related to head incidence angles, adapted from

Portela [240].

Suppose the distance to the sound source exceeds 3 meters. In that case, it can

be considered approximately a plane wave, thus making the previous HRTFs

almost independent of the distance to the sound source [38]. Blauert [39] also

explain two other types of HRTF, namely:

• Monaural Transfer Function (MTF): relates the sound pressure, at a

measurement point in the ear canal, from a sound source at any position

to a sound pressure measured at the same point, with a sound source at

a reference position (ϕ = 0 and β = 0). MTF is given by

MTF =

P

i

P

1

r,ϕ,β,f

P

i

P

1

ϕ=0

◦

,β=0

◦

,f

, (2.1)

where p

i

it can be p

1

, p

2

, p

3

or p

4

.

– p

1

sound pressure in the center of the head position with the listener

Chapter 2. Human Binaural Hearing 14

absent;

– p

2

sound pressure at the entrance of the occluded ear canal;

– p

3

sound pressure at the entrance to the ear canal;

– p

4

: eardrum sound pressure.

• Interaural Transfer Function (ITF): relates the sound pressures at corre-

sponding measurement points in the two auditory canals. The reference

pressure will then be the ear that is directed towards the sound source.

The ITF can be obtained through

ITF =

P

i Opposite side of the source

P

i Side facing the source

. (2.2)

More considerable variations are seen above 200 Hz in HRTFs [293] because

the head, torso, and shoulders begin to significantly interfere in frequencies

up to approximately 1.5 kHz (mid frequencies). In addition, the pinna and

the cavum conchae (space inside the most inferior part of the helix cross; it

forms the vestibule that leads into the external acoustic meatus [270]) distort

frequencies greater than 2 kHz.

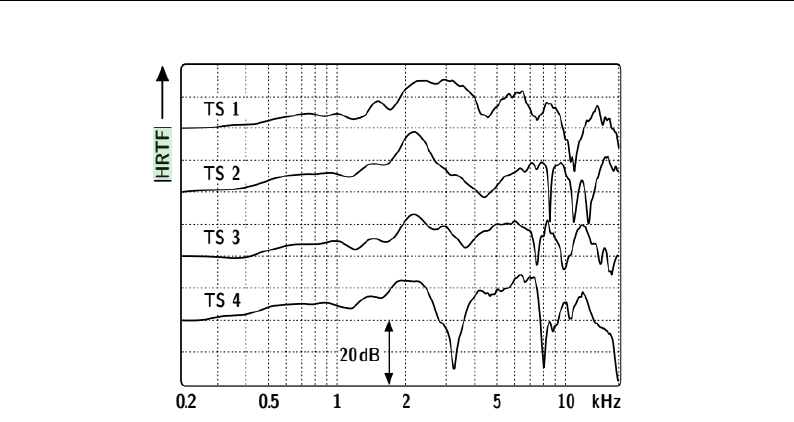

HRTF measurements vary from person to person, as seen in Figure 2.4, where

TS 1, TS 2, TS 3, and TS 4. represent HRTFs of different people. When

recording using mannequins or different people’s ear canals (non-individualized

HRTFs), the reproduction precision in terms of spatial location and realism

tends to be diminished [51, 178]. This poorer precision is because the transfer

function will differ for each individual, especially at high frequencies [155].

This dependence is related to the wavelength and the singular irregularity of

the ear canal of each human being [38].

Chapter 2. Human Binaural Hearing 15

Figure 2.4: Head-related transfer functions of four human test participants, frontal

incidence, from Vorl¨ander [293].

Binaural Impulse Response A Binaural Room Impulse Response (BRIR)

results from a measurement of the response of a room to excitation from an

(ideally) impulsive sound [183]. The BRIRs are composed of a sequence of

sounds. Parameters like the magnitude and the decay rate, the phase, and

time distribution are the key to understanding how a BRIR can audibly char-

acterize a room to a human perception [167]. Albeit the air contains a small

portion of Co2 that is dispersive, sound propagation velocity can be considered

homogeneous in the air (non-dispersive medium) [312] for the Room Impulse

Responses (RIRs). The first sound from a source that reaches a receptor inside

the room travels a smaller distance, and it is called direct sound (DS). Usually,

the following sounds result from reflections that travel a longer path, losing

energy on each interaction and resulting in an exponential decay of magnitude.

The BRIR is proposed to collect the room information as a regular Impulse

Response, although having two sensors separated as the typical human head.

Nowadays, BRIR can be recorded with small microphones placed in the ear

canal of a person or utilizing microphones placed in mannequins [197].

A BRIR is the auditory time representation of a set source-receptor defined

by its position, orientation, acoustic properties as directionality of the sound

Chapter 2. Human Binaural Hearing 16

source, as well as from the physical elements within the environment [38, 108].

The convolution of BRIR with audio signals is a feasible task for modern

computation, which allows the creation and manipulation of sounds even in

real-time applications [62, 217]. Thus, it is possible to impose spatial and

reverberant characteristics of different spaces to a given sound [109].

2.2.5 Subjective aspects of an audible reflection

The impulse response is composed of the direct sound followed by a series of

reflections (initial and later reflections) [45, 165]. Essential knowledge on how

the human auditory system processes the spectral and spatial information

contained in the impulse response has been obtained through studies with

simulated acoustic fields. [6, 17, 93, 125, 141, 174, 176, 188, 193, 257, 305,

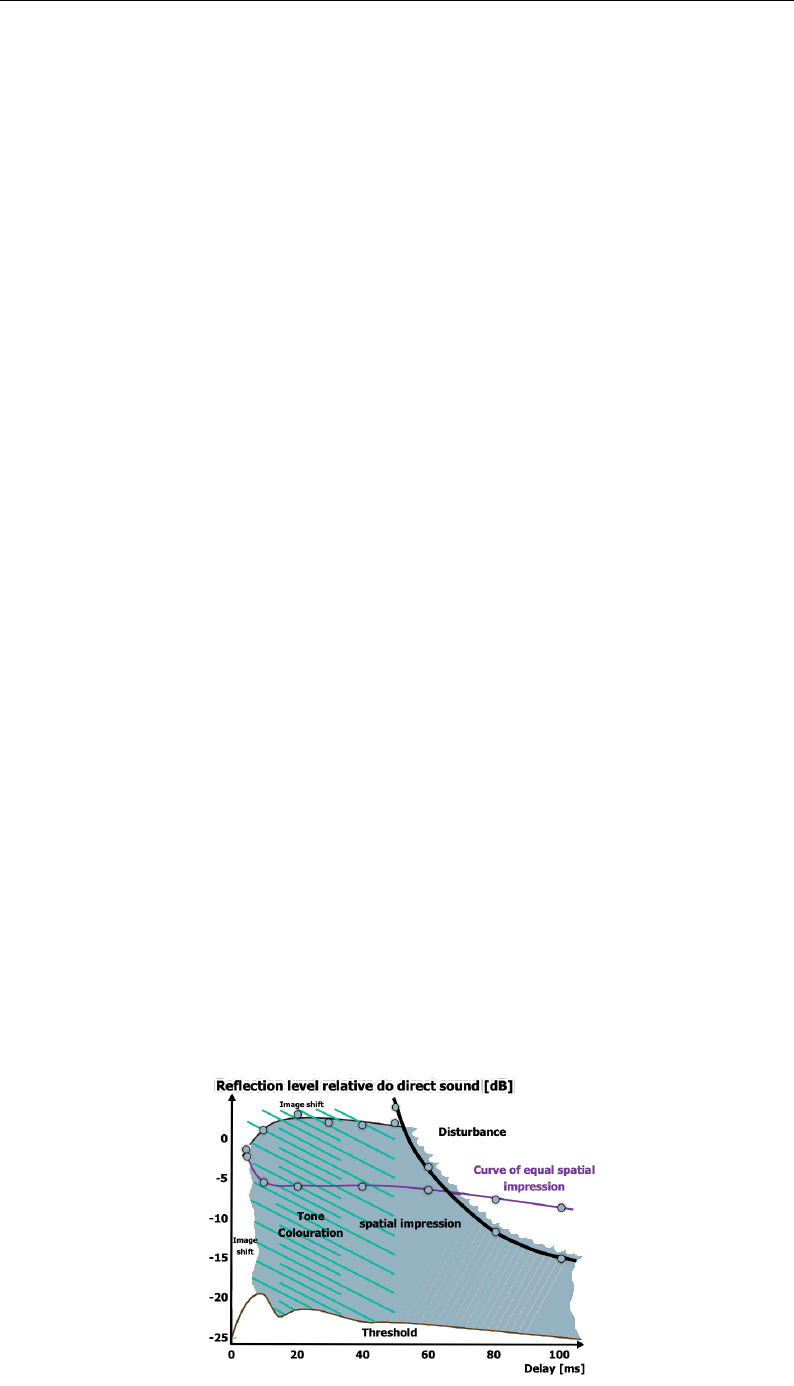

321]. The results of Barron’s experiments, depicted in Figure 2.5, involved

the reproduction of both a direct sound and a lateral reflection. These two

auditory stimuli were manipulated in terms of their time delay and relative

amplitude, with the goal of eliciting subjective impressions correlated to these

factors. By varying the time between the direct sound and the reflection, as

well as the relative amplitude of these stimuli, it was possible to understand

better how these characteristics impact the overall auditory experience.

Figure 2.5: Audible effects of a single reflection arriving from the side (adapt from

Rossing [254]).

Chapter 2. Spatial Sound & Virtual Acoustics 17

The audibility threshold curve indicates that the reflection will be inaudible if

the delay or the relative level is minimal. The reflection’s subjective effect also

depends on the direction of incidence of the sound source in the horizontal and

vertical plane. It is possible to note that for delays of up to 10 milliseconds,

the relative difference in level must be at least -20 dB for the reflection to be

noticeable.

The echo effect is typically observed in delays of more than 50 milliseconds,

being an acoustic repetition with a high relative level—approximately the same

energy as the direct sound. The coloring effect is associated with the significant

change in the spectrum caused by the constructive and destructive interference

of the superposition of sound waves.

The image change happens when there are reflections with relative levels higher

than direct sound or minimal delays. In this case, the subjective perception is

that the sound source has a different position in space than the visual system

perceives.

2.3 Spatial Sound & Virtual Acoustics

The sound perceived by humans is identified and classified based on physical

properties, such as intensity and frequency [242]. Human beings are equipped

with two ears (two highly efficient sound sensors), enabling a real-time compar-

ison of these properties between the captured sound signals [9]. The sounds

and the dynamic interaction between sound sources, their positions, move-

ments, and the physical interaction of the generated sound waves with the

environment can be perceived by normal-hearing people, providing what is

called spatial awareness [153]. That auditory spatial awareness includes the

localization of the sound source, estimation of distance, and estimation of the

Chapter 2. Spatial Sound & Virtual Acoustics 18

size of the surrounding space [38, 305]. A person with hearing loss may lose

this ability partially or entirely; the spatial awareness is also tied to the lis-

tener’s experience with the sound and the environment, motivation, or fatigue

level [54, 304].

In the field of virtual acoustics, the ultimate goal is to generate a sound event

that elicits a desired auditory sensation, creating a Virtual Sound Environment

(VSE) [293]. In order to achieve this, it is necessary to synthesize or record

the acoustic properties of the target scene and subsequently reproduce them

in a manner that accurately reflects the original acoustic conditions [97]. This

involves a careful consideration of the various factors that contribute to the

overall auditory experience, including the spectral and spatial characteristics

of the sound. By accurately recreating these properties, it is possible to create

a highly immersive and realistic VSE that effectively conveys the intended

auditory experience to the listener [196, 213, 293].

2.3.1 Virtualization

Nowadays, it is possible to create audio files containing information about

sound properties related to a specific space [293]. For example, it is possible to

encode information about the source and receptor position, the transmission

path, reflections on surfaces, and the amount of energy absorbed and scattered

(e.g., Odeon [59], a commercially available acoustical software). The sound

field properties can be simulated, synthesized, or recorded in-situ [113, 293].

These signals can be encoded and reproduced correctly in various reproduction

systems [122, 161]. The creation of files that can be reproduced containing

such information is called auralization. As different interpretations of the

terms occur in literature, in this thesis, the virtualization process is considered

to encompass the auralization and the reproduction of a sound (recorded,

Chapter 2. Spatial Sound & Virtual Acoustics 19

simulated, or synthesized) that includes spatial properties.

2.3.1.1 Auralization

Auralization is a relatively recent procedure. The first studies were conducted

in 1929; Spand¨ock and colleagues tried to process signals measured in a scale-

created room. After that, in 1934, Spand¨ock [280] succeeded in the first aural-

ization, in the analogical way, using ultrasonic signals of scale models recorded

in magnetic tapes. In 1962 Schroeder [263] incorporated the computing pro-

cess into the auralization. In 1968 Krokstad [146] developed the first acoustic

room simulation software. The term auralization was introduced in the litera-

ture by Kleiner in 1993: “Auralization is the process of rendering audible, by

physical or mathematical modeling, the soundfield of a source in a space, in

such a way as to simulate the binaural listening experience at a given position

in the modeled space.” (Kleiner [138])

In his book titled Auralization, published in 2008, Vorl¨ander defined: “Aural-

ization is the technique of creating audible sound files from numerical (simu-

lated, measured, or synthesized) data.” (Vorl¨ander [293])

In this work, auralization is understood as a technique to create files that can

be executed as perceivable sounds. An auralization method describes the tech-

nique; it can involve one or more auralization techniques. These sounds can

then be virtualized (reproduced) via loudspeakers or headphones and provide

audible information about a specific acoustical scene in a defined space, fol-

lowing Vorlander’s definition. That was chosen to encourage the separation of

the process as an auralized sound file can contain information that allows it to

be decoded in different reproduction systems [320].

Auralization is consolidated in architectural acoustics [45, 148, 165, 254], and

Chapter 2. Spatial Sound & Virtual Acoustics 20

it is also emerging in environmental acoustics [19, 68, 69, 139, 162, 231, 232].

This technique allows a piece of audible information to be easily accessed and

understood. It is also an integral part of the entertainment industry in games,

movies, and virtual or mixed reality [320]. Knowing an environment’s acoustic

properties allows it to manipulate or add synthesized or recorded elements,

leading the receiver to the desired auditory impression, including the sound’s

spatial distribution [62]. This process is also used in hearing research, allowing

researchers to introduce more ecologically valid sound scenarios to their study

(See Section 2.3.4).

Sound spatiality, or the perception of sound waves arriving from various direc-

tions and the ability to locate them in space, is a crucial aspect of the auditory

experience [40]. Auralization, which is analogous to visualization, involves the

representation of sound fields and sources, the simulation of sound propaga-

tion, and the strategy to decode in the spatial reproduction setup [293]. That

is typically achieved through tri-dimensional computer models and digital sig-

nal processing techniques, which are applied to generate auralizations that can

be reproduced via acoustic transducers [293].

The modeling paradigm used to create the spatial sensation can be percep-

tually or physically based [39, 106, 164, 276]. Multiple dimensions influ-

ence sound perception; the type of generation of the sound, the wind direc-

tion, the temperature, the source and the receptor movement, space (size,

shape, and content), receptor’s spatial sensitivity, and source directivity are

some examples. That implies the importance of physical effects as Doppler

shifts [96, 284, 293]. Furthermore, the review of room acoustic and psychoa-

coustics elements (See Section 2.3.3) corroborates the auralization modeling

procedure’s understanding.

Chapter 2. Spatial Sound & Virtual Acoustics 21

2.3.1.2 Reproduction

Sound signals containing acoustic characteristics of a space can be reproduced

either with binaural techniques (headphones or loudspeakers) or with multiple

loudspeakers (multichannel techniques) [293]. Moreover, an acoustic model

for a space can be analytically or numerically implemented, having a series

of competent algorithms and commercial software and tools available [49].

With that, it is also possible to measure micro and macro acoustic properties

for materials in a laboratory or in-situ [206] and access databases of various

coefficients and indexes to an extended catalog of materials [50, 71, 158, 266].

On the reproduction end of the virtualization process, factors such as fre-

quency and level calibration, signal processing, and the frequency response of

the hardware can significantly impact the accuracy of the final sound (e.g., the

orientation/correction of the microphone when calibrating the system [274]).

Depending on the chosen paradigm, a lack of attention to these details may

disrupt an accurate description of the sound field, sound event, or sound sen-

sation [214, 282, 283, 320]. Additionally, the quality of the stimuli may be

compromised depending on the chosen reproduction technique, which is often

tied to the hardware available [77, 166, 275, 276]. That can lead to undesired

effects on the level of immersion and problems with the accuracy of sound

localization and identification (e.g., source width, source separation, sound

pressure level, and coloration and spatial confusion effects [97]). The process

of building a VSE is called sound virtualization, which involves both the aural-

ization and reproduction stages to create audible sound from a file. The main

technical approaches or paradigms for reproducing auralized sound are Binau-

ral, Panorama, and Sound Field Synthesis (Section 2.3.2). These paradigms

can be distinguished by their output, which can be physically or perceptu-

ally motivated. For example, while binaural methods are treated apart, they

can be intrinsically classified in a physically-motivated paradigm since its suc-

Chapter 2. Spatial Sound & Virtual Acoustics 22

cess relies on reproducing the correct physical signal at a specific point in the

listener’s auditory system, typically the entrance of the ear canal [106].

2.3.2 Auralization Paradigms

2.3.2.1 Binaural

Binaural hearing, which refers to the ability to perceive sound in a three-

dimensional auditory space, is a fundamental concept in auditory research and

has been extensively studied by researchers such as Blauert [40]. In the con-

text of auralization, the term ”binaural” refers to the specific paradigm that

aims to reproduce the exact sound pressure level of a sound event at the lis-

tener’s eardrums. That can be achieved through the use of headphones or a

pair of loudspeakers (known as transaural reproduction) [314]. However, when

using distant loudspeakers, it is necessary to consider the interference that can

occur between the sounds coming from each speaker. To mitigate this issue,

techniques such as cross-talk cancellation (CTC) [60, 262] can be employed,

which involve manipulating a set of filters to cancel out the distortions caused

by the sound from one speaker reaching the other ear. Another form of bin-

aural reproduction involves the use of closer loudspeakers that are nearfield

compensated.

Binaural methods over headphones is commonly applied. It requires no ex-

tensive hardware (in simple setups that do not track the listener’s head), pro-

viding a valid acoustic representation and spatial awareness [293]. A Disad-

vantage of this method can include the accuracy dependence of individualized

HRTF (as each human being have his own slightly different anatomic ”fil-

ter set”) [314]. Over headphones also, the movement of the listener’s head

can be disruptive to the immersion [179]. It may require tracking the head’s

Chapter 2. Spatial Sound & Virtual Acoustics 23

movement [11, 115, 252], e.g., when movements are required or allowed in

an experiment. Furthermore, a listener wearing a pair of headphones may

not represent a realistic situation. For example, an experiment with a virtual

auditory environment that represents a regular daily conversation with aged

participants may lose the task’s ecological validity. Also, usually, headphones

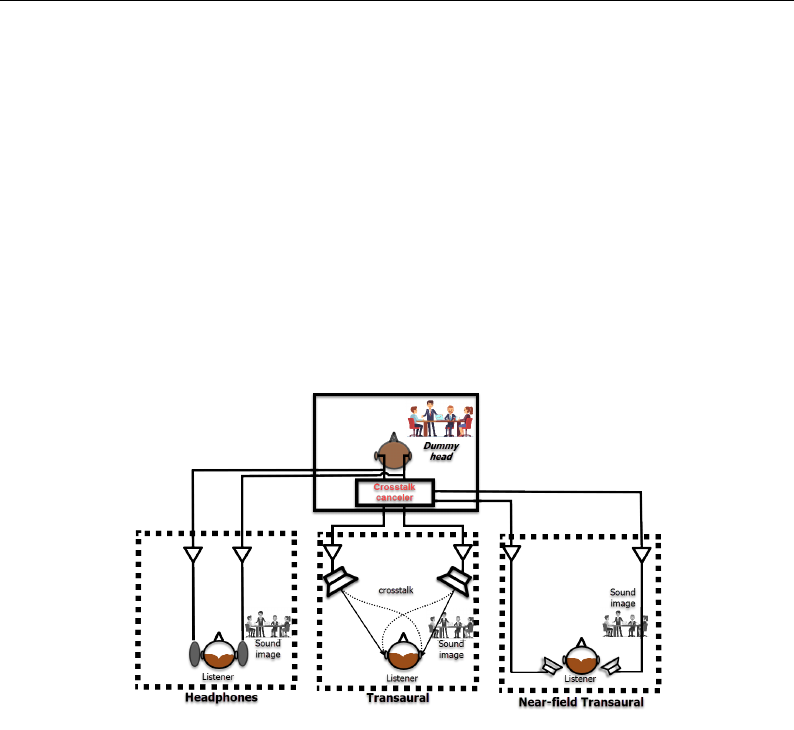

prevent the listener from wearing hearing devices. Figure 2.6 illustrates the

main idea behind different binaural reproduction setups.

Figure 2.6: Binaural reproduction setups: Headphones, transaural and near-field

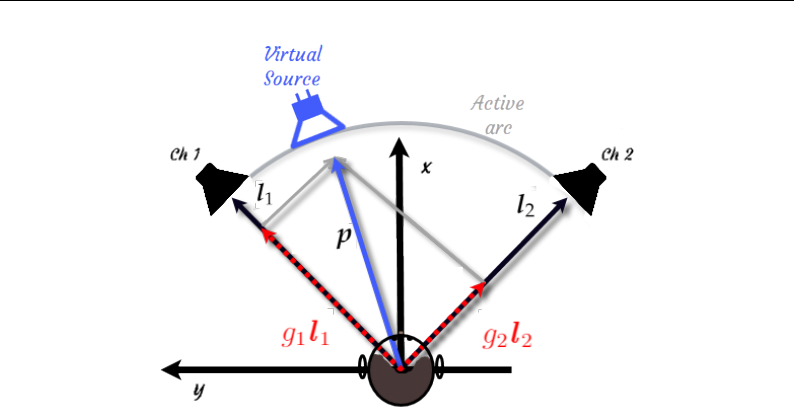

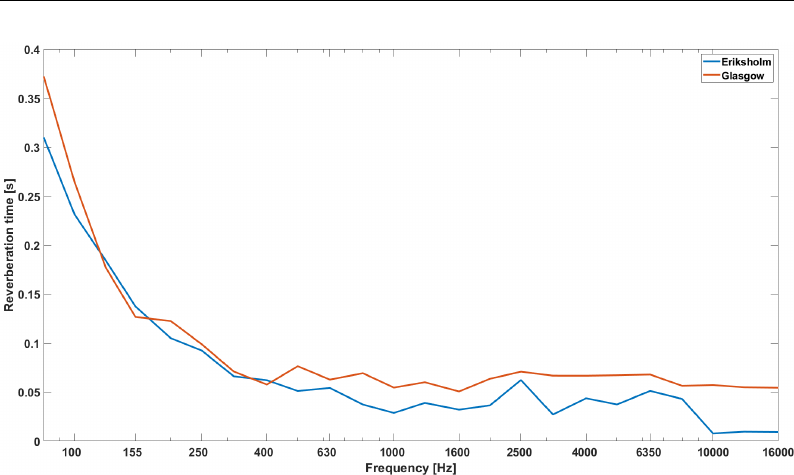

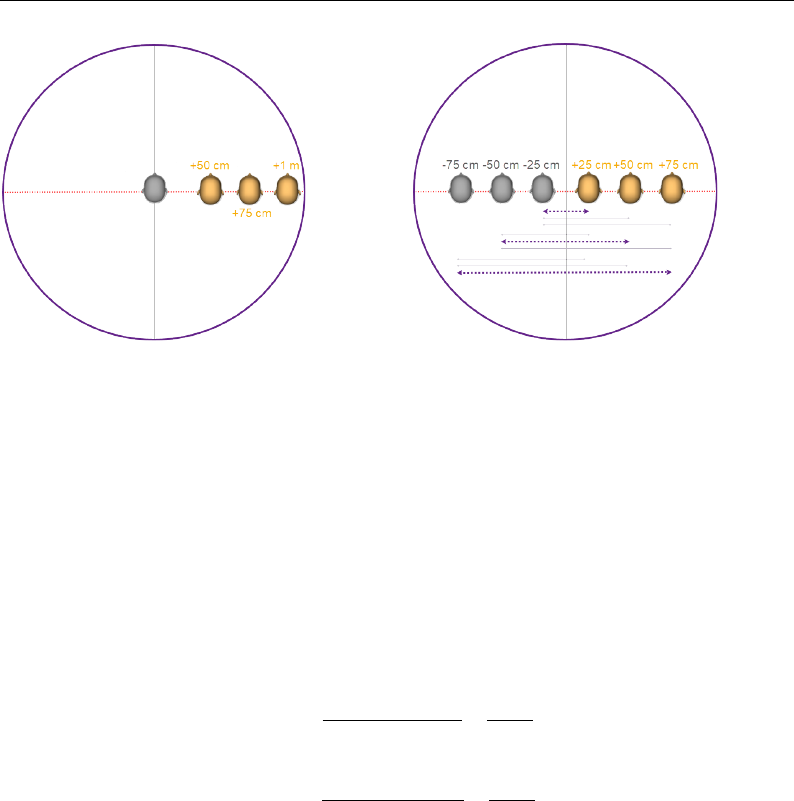

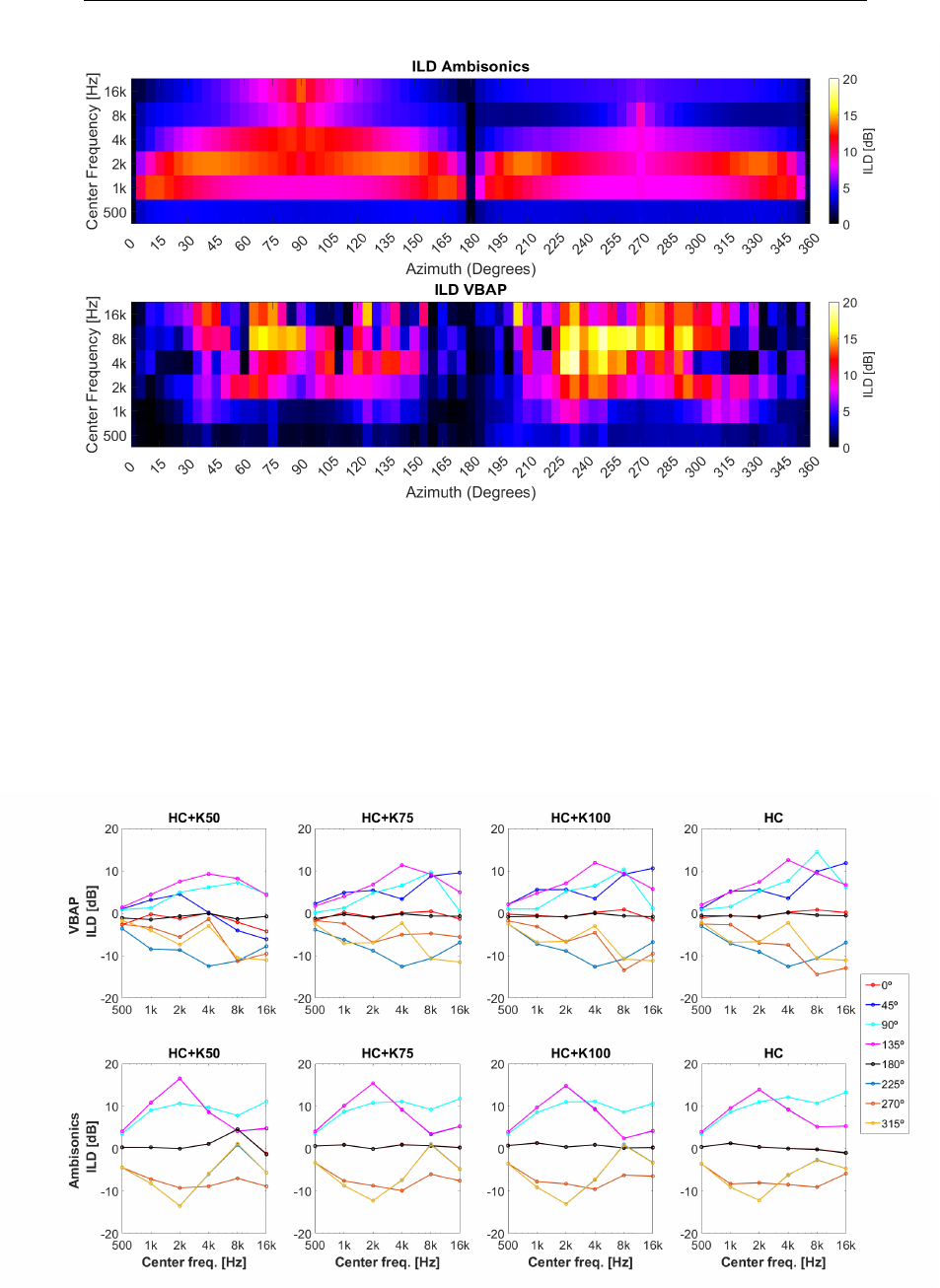

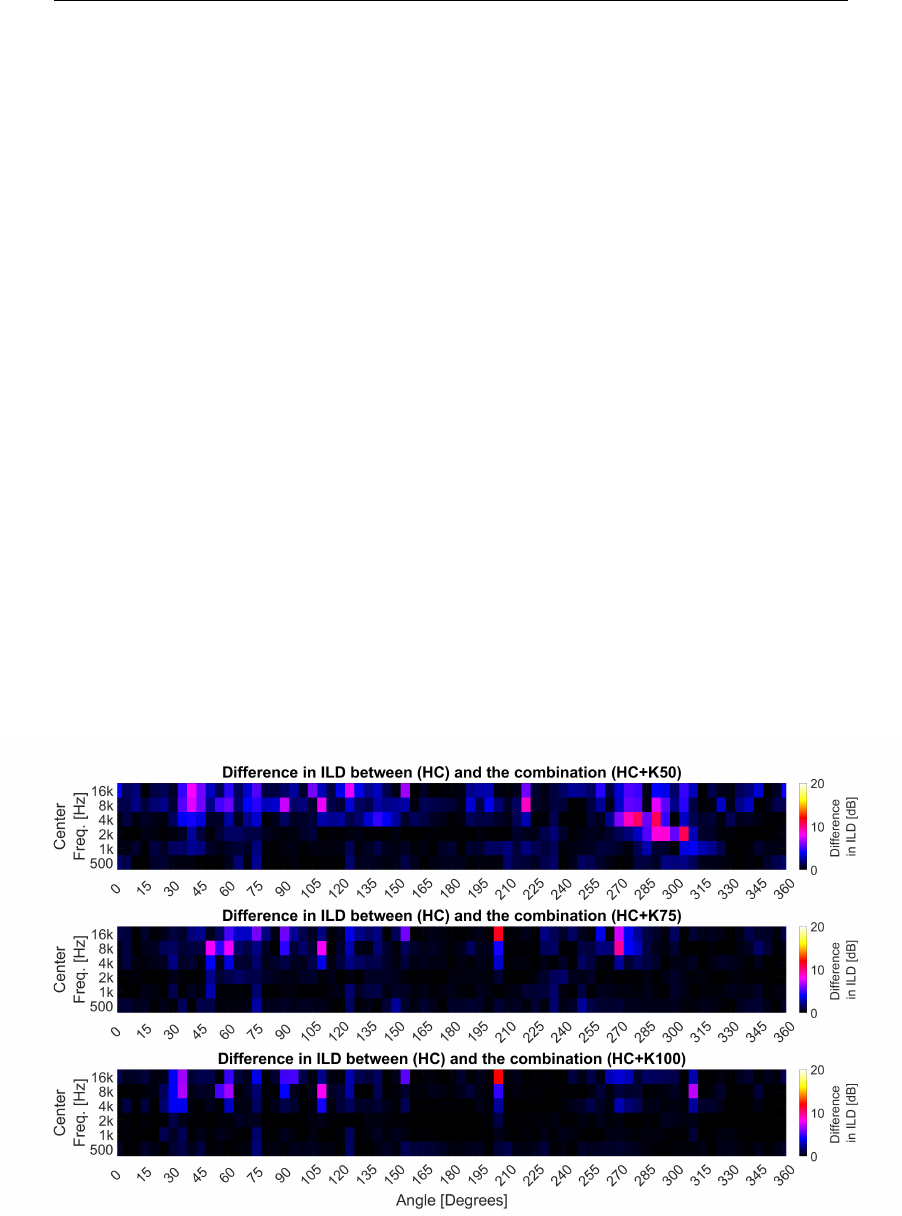

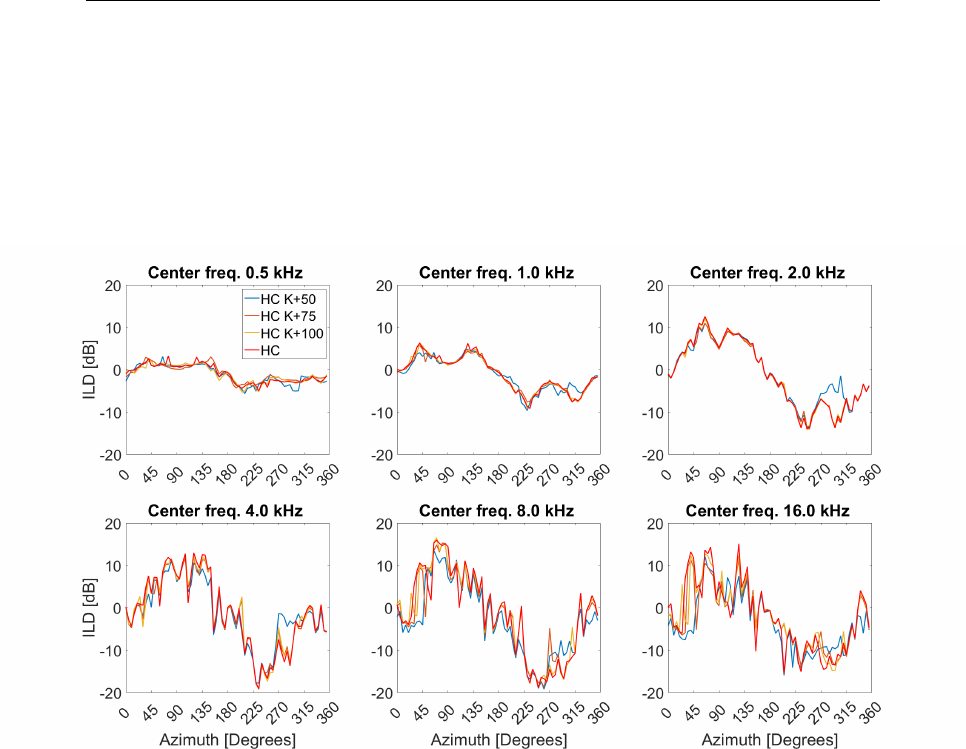

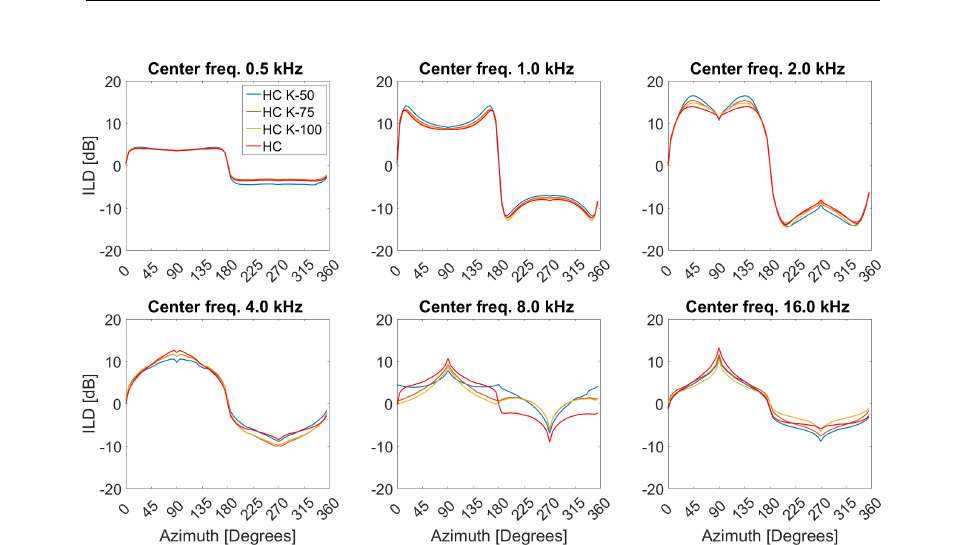

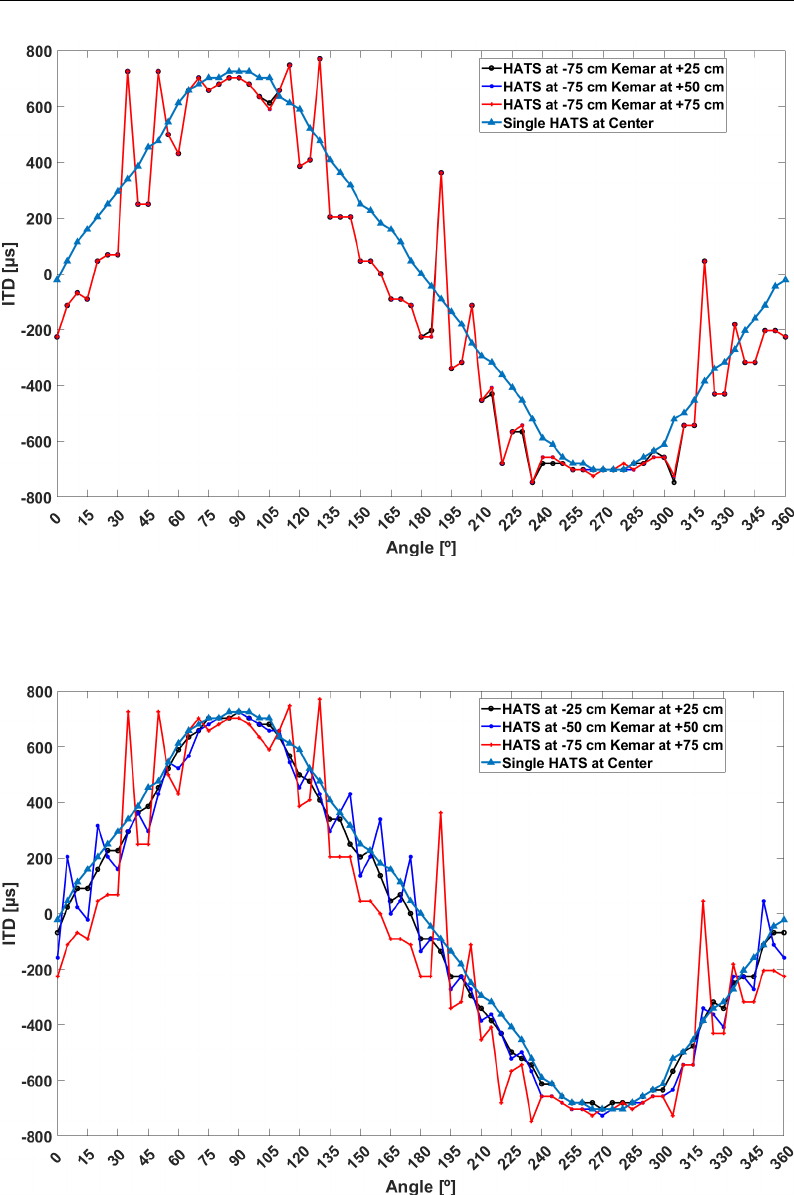

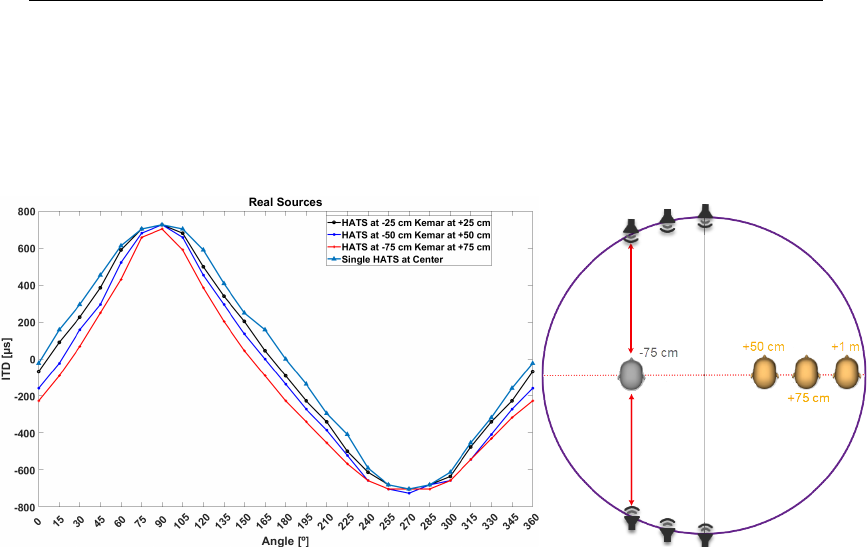

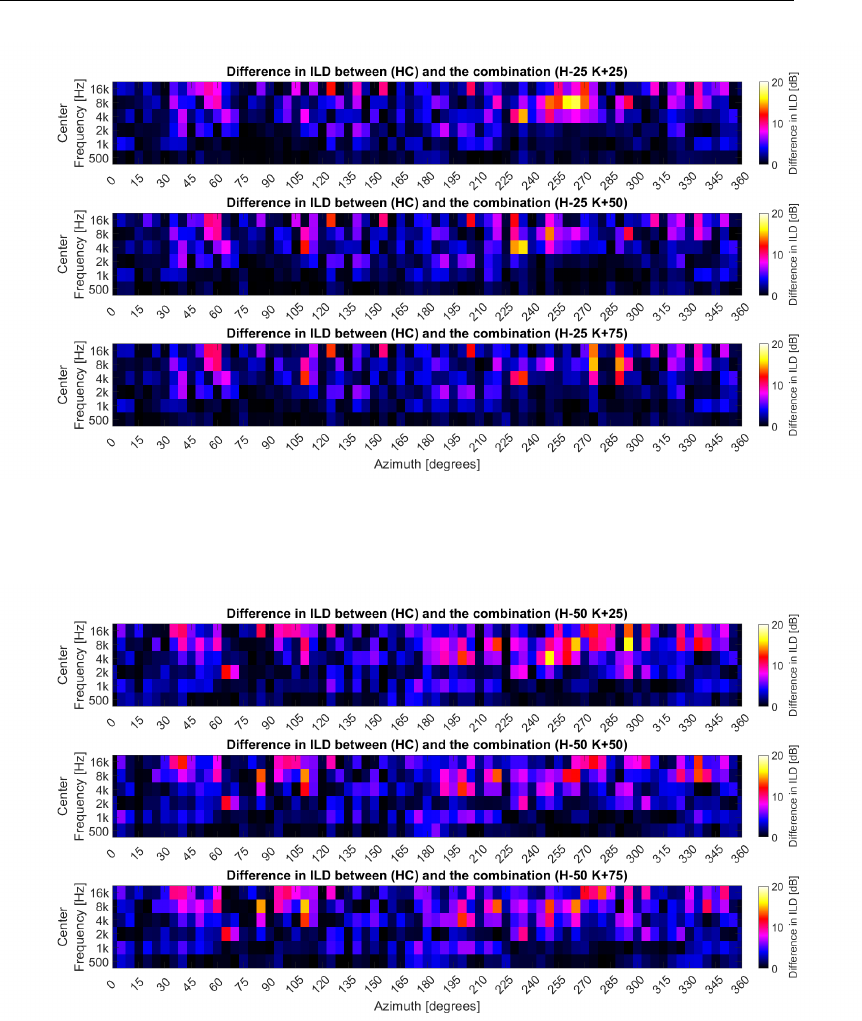

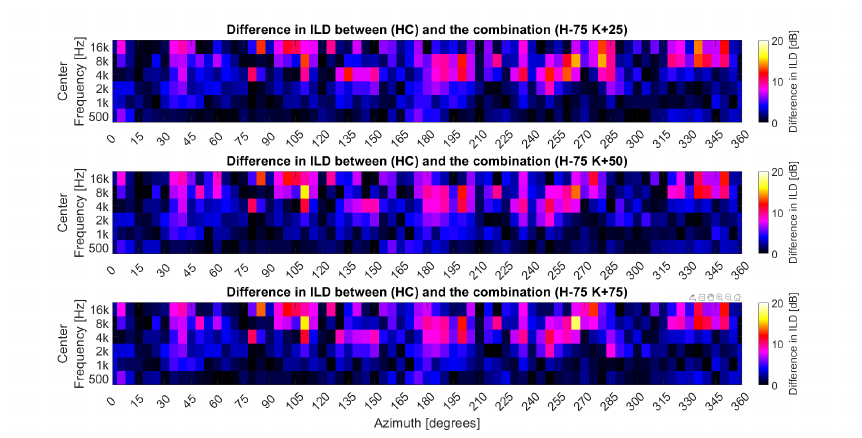

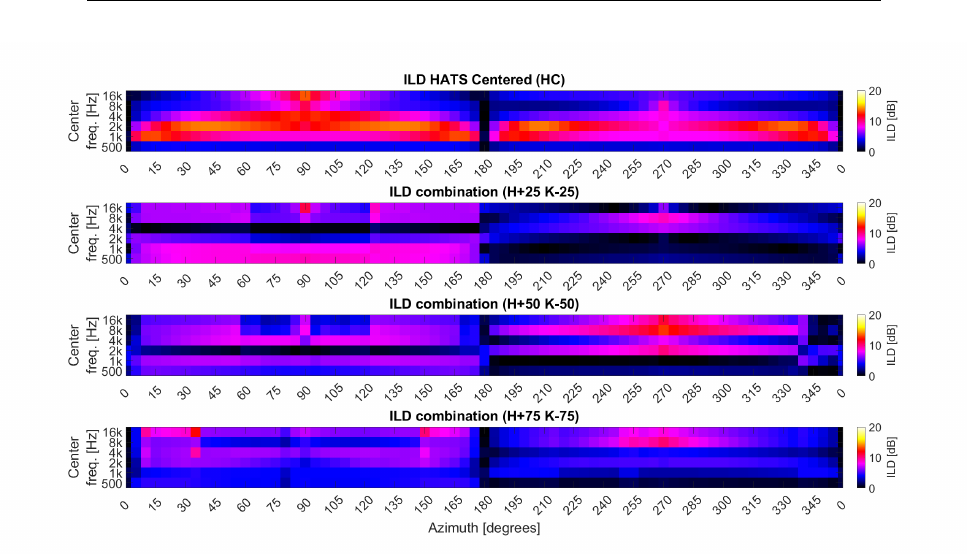

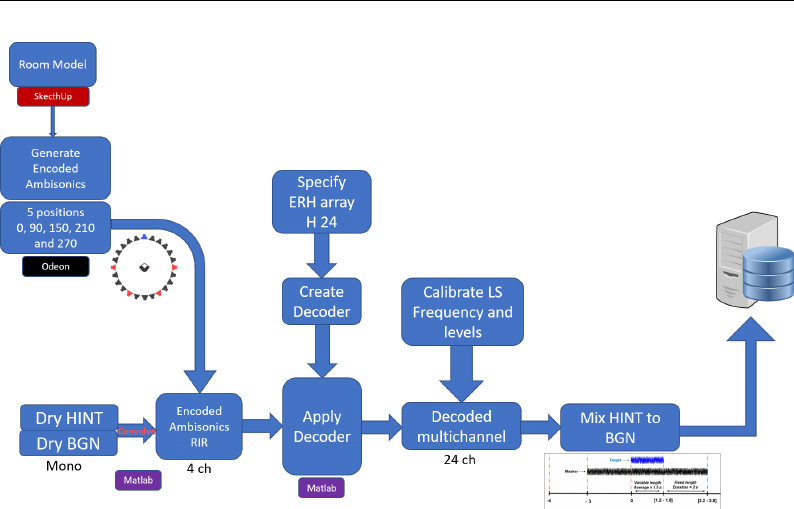

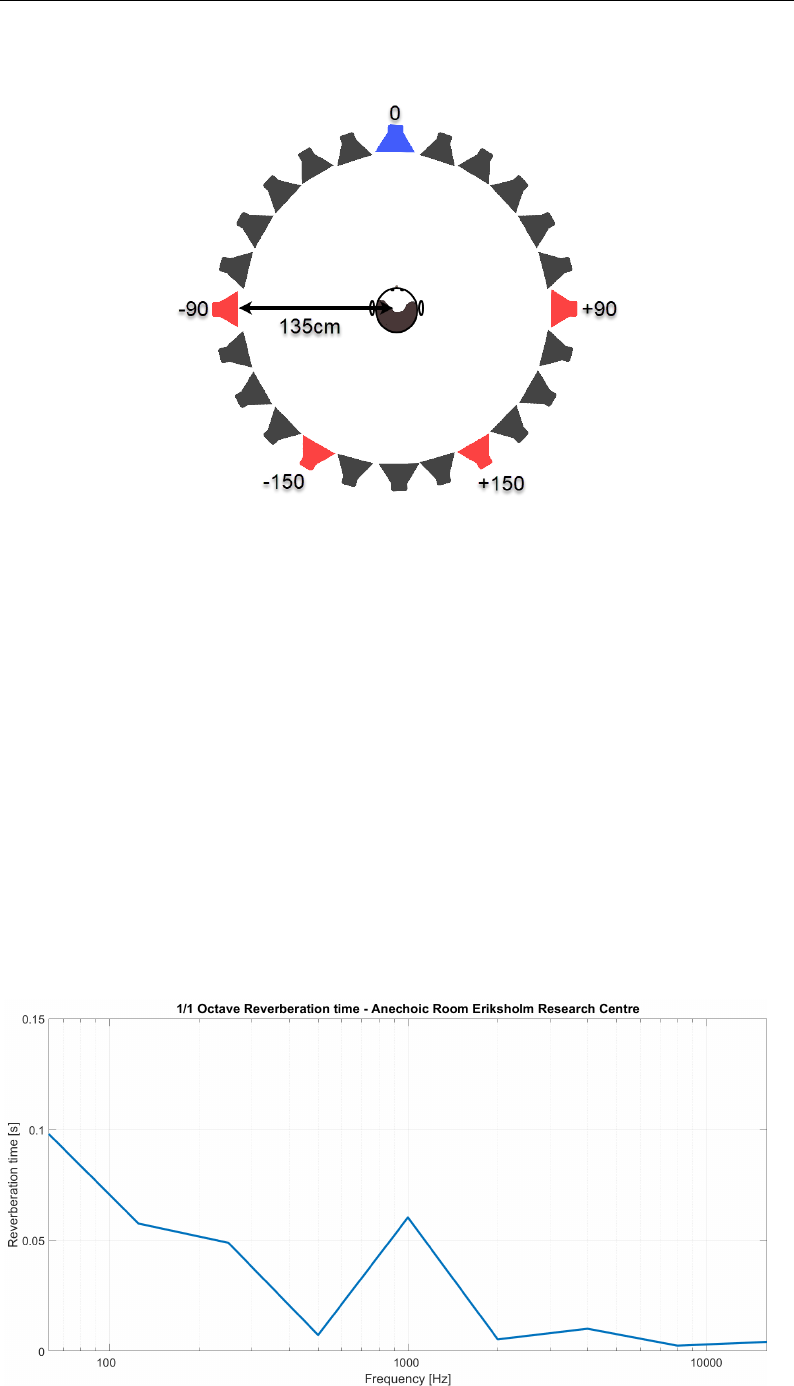

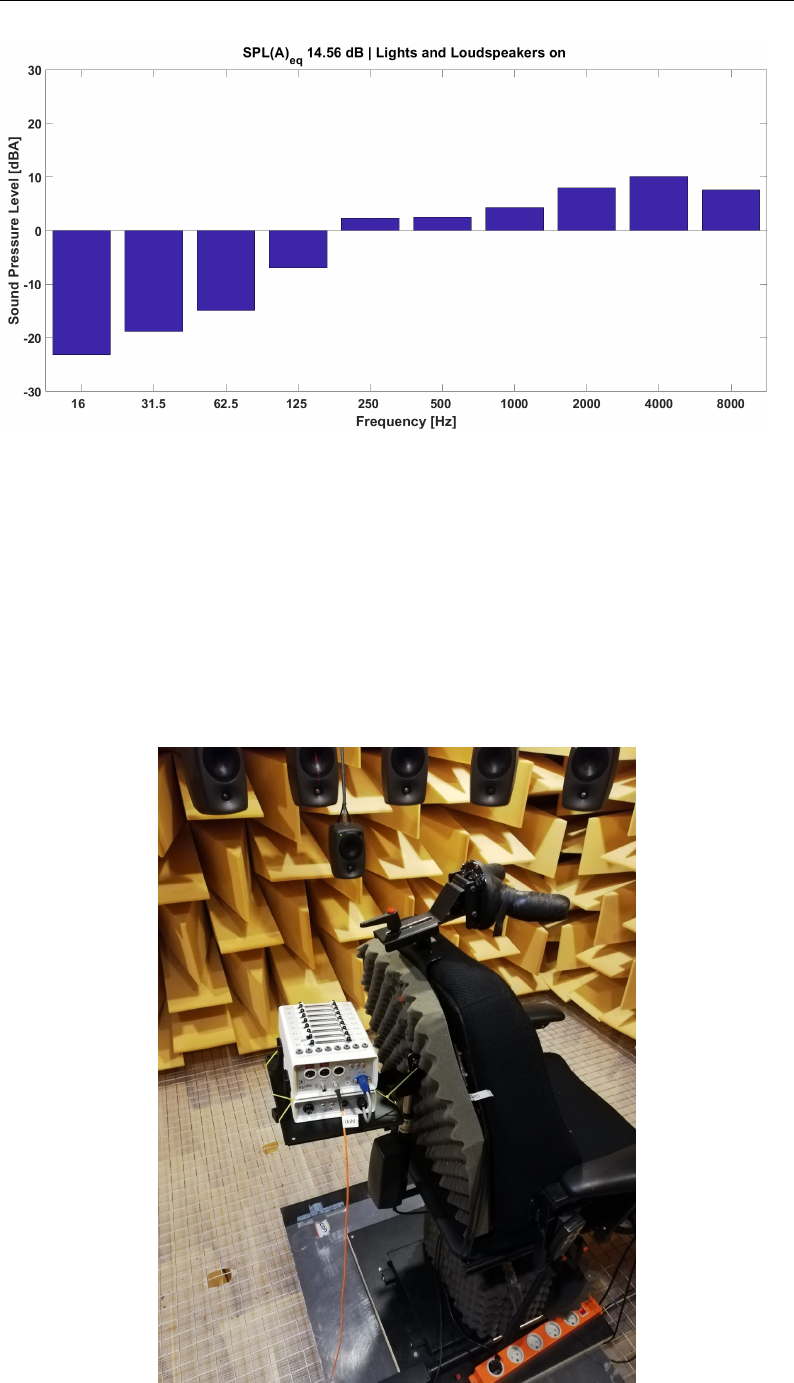

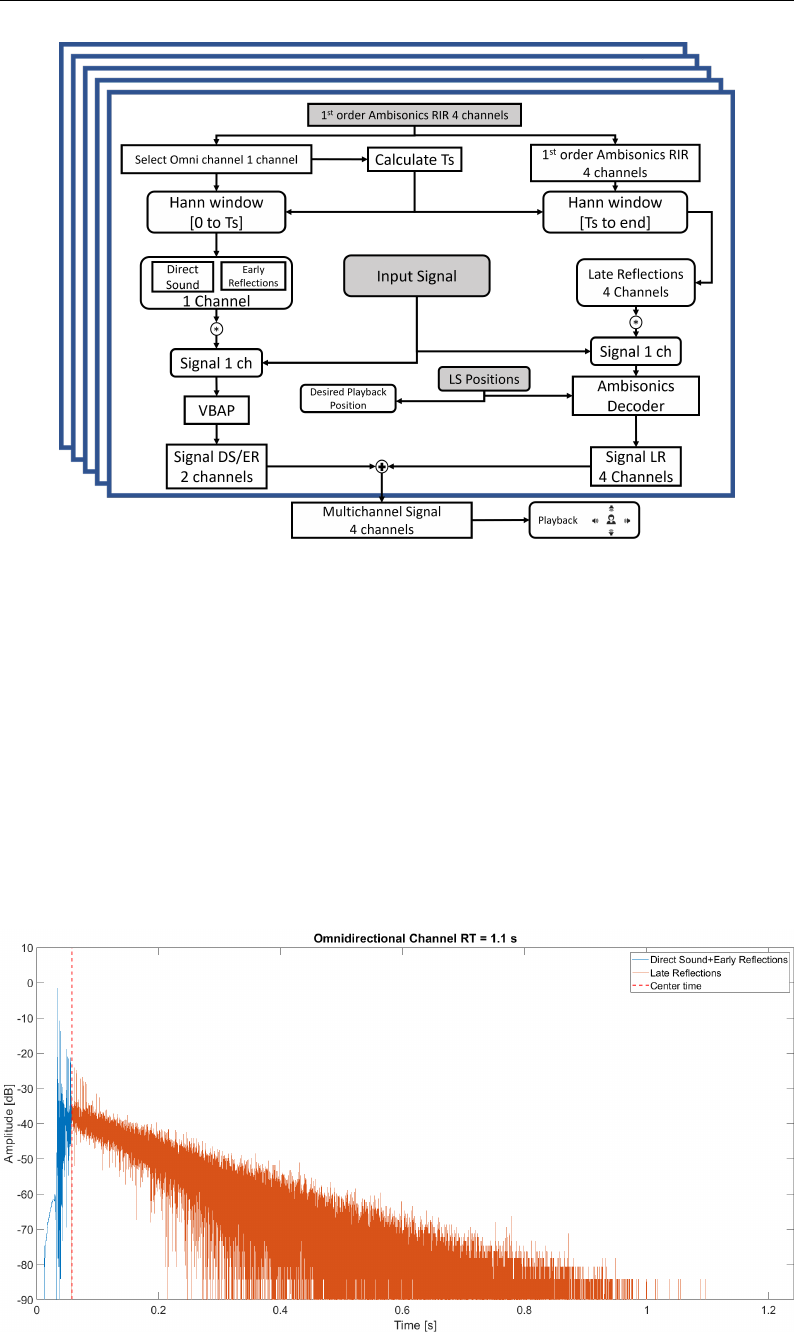

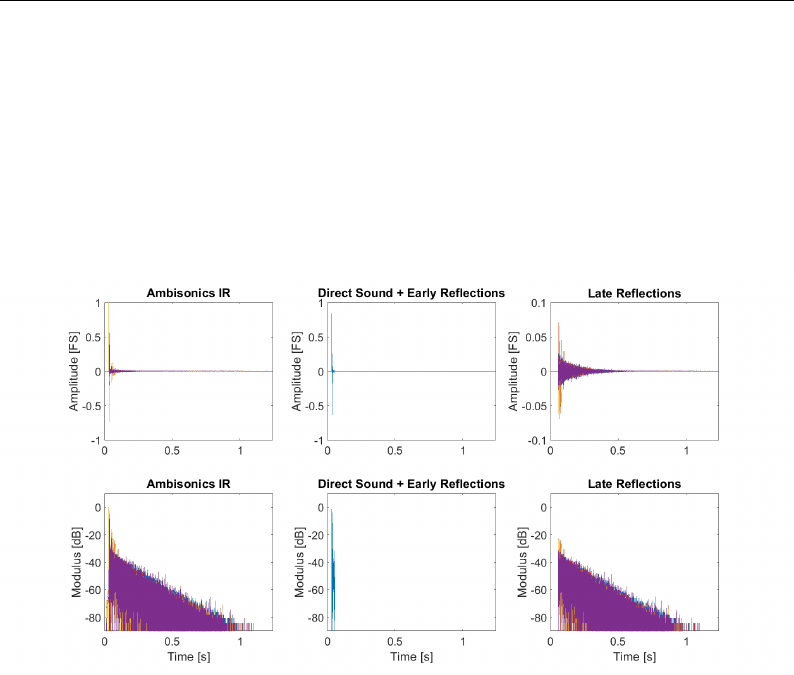

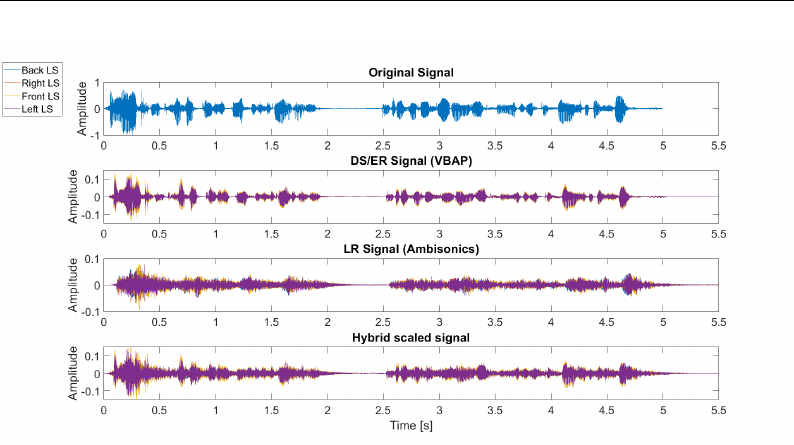

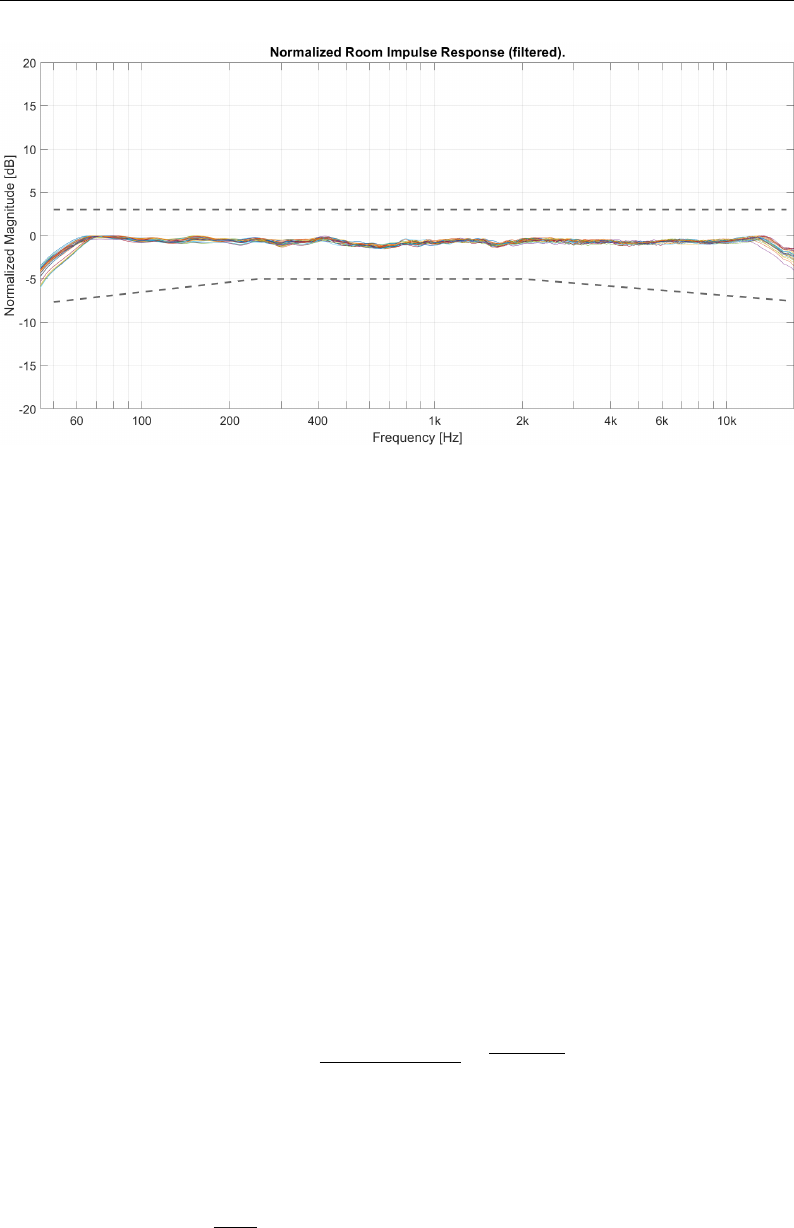

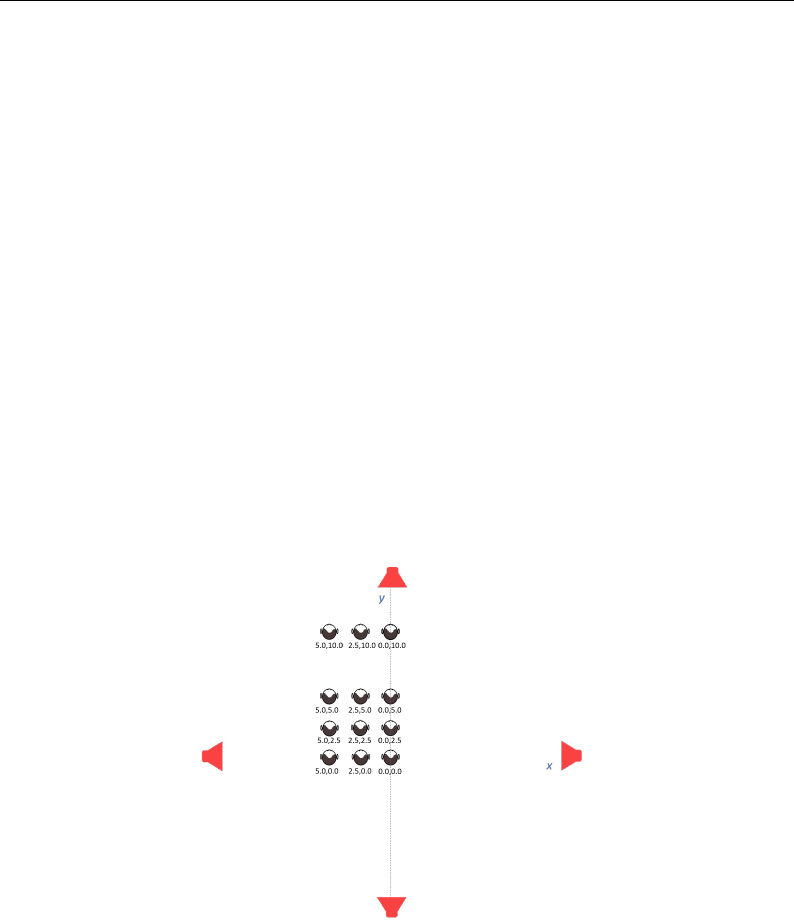

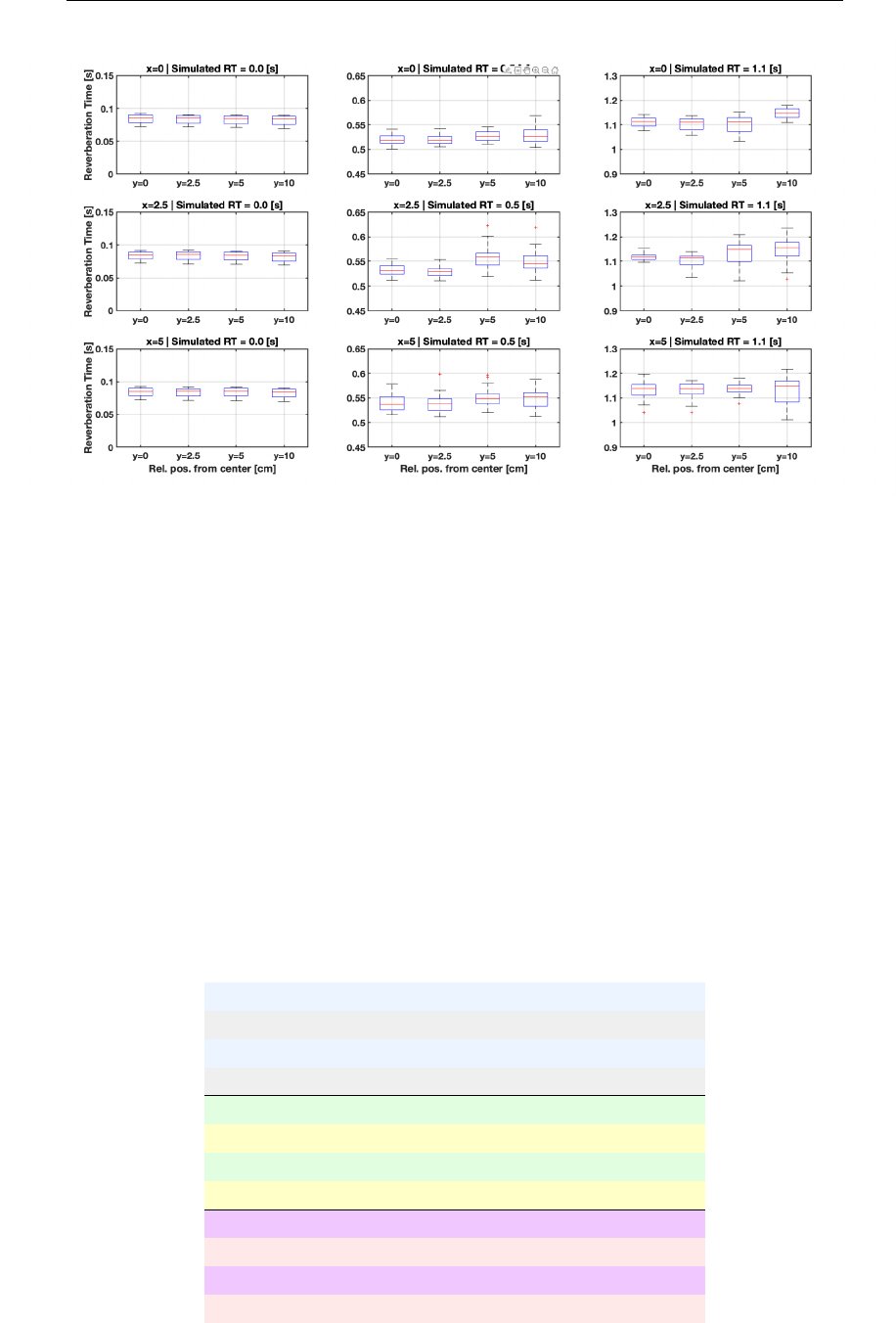

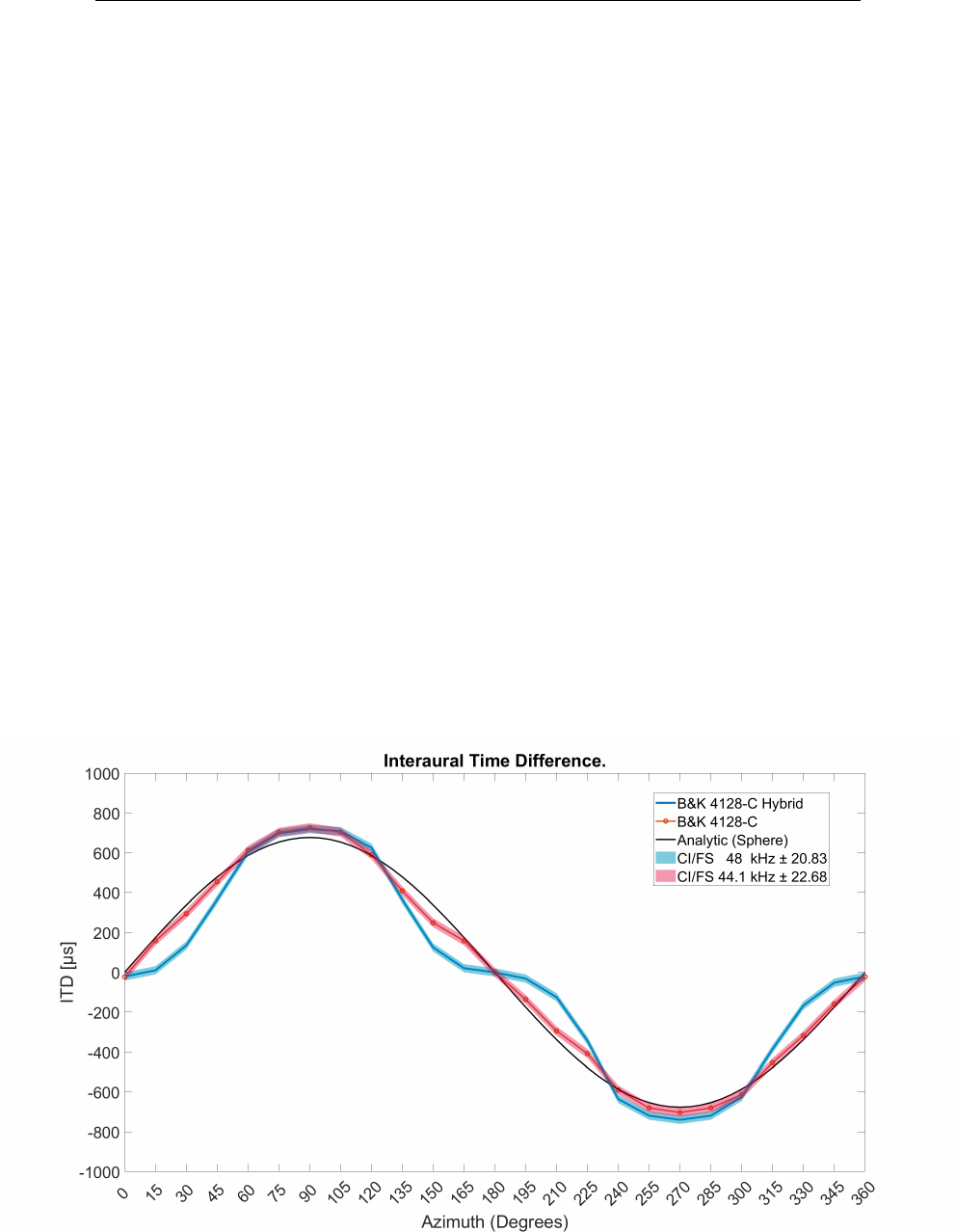

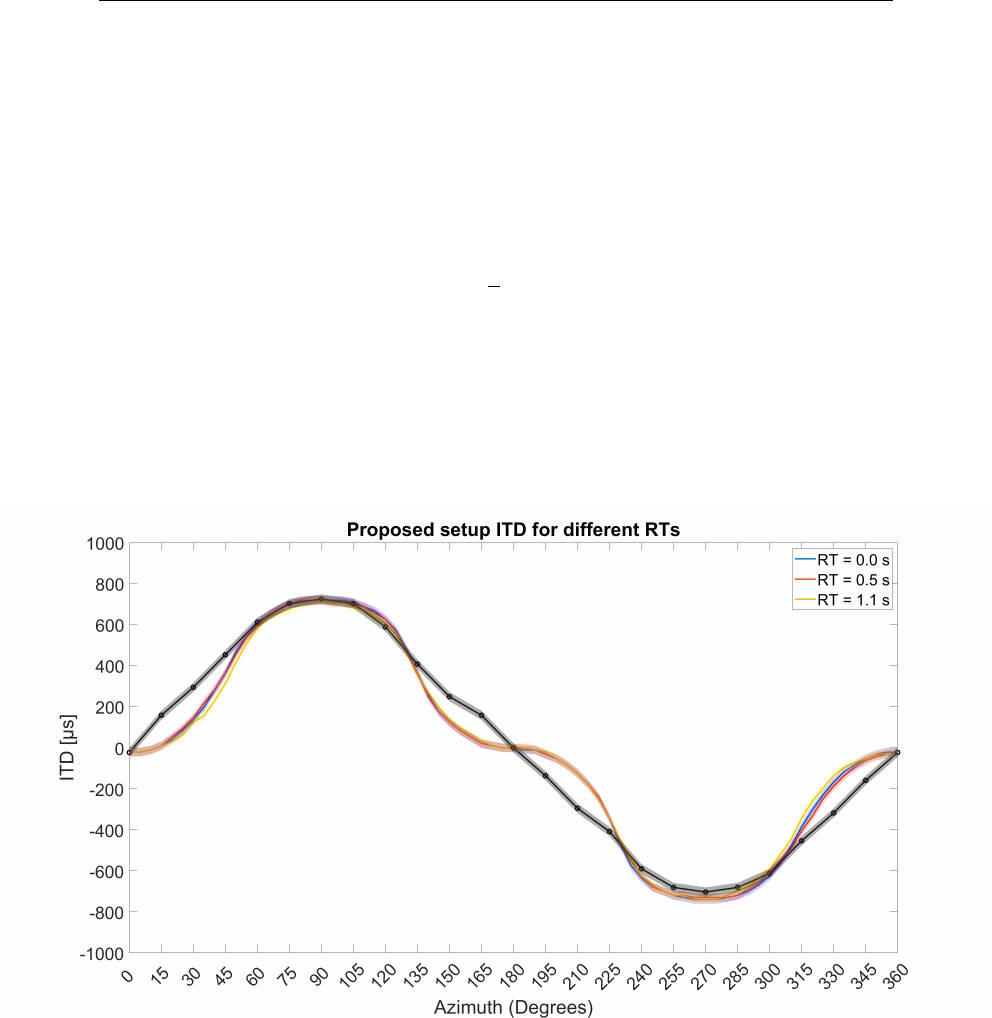

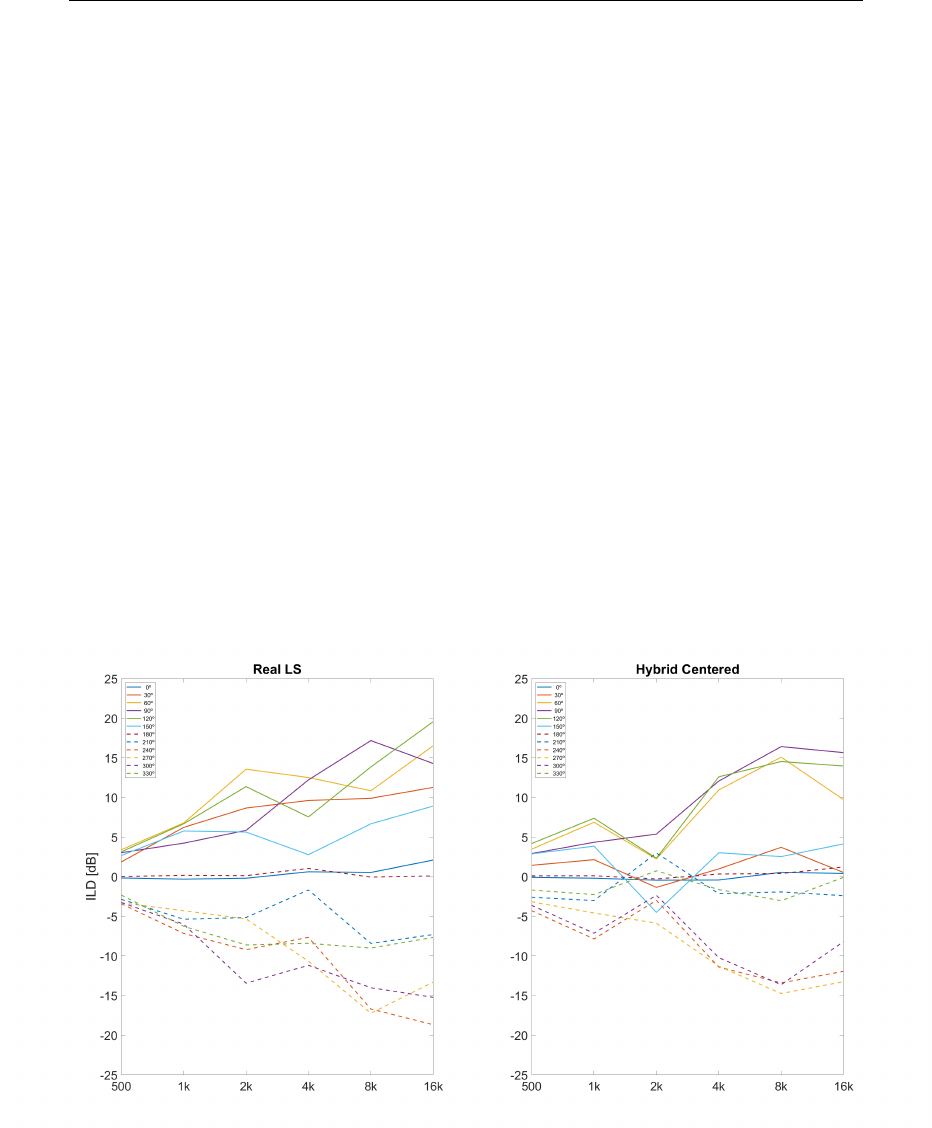

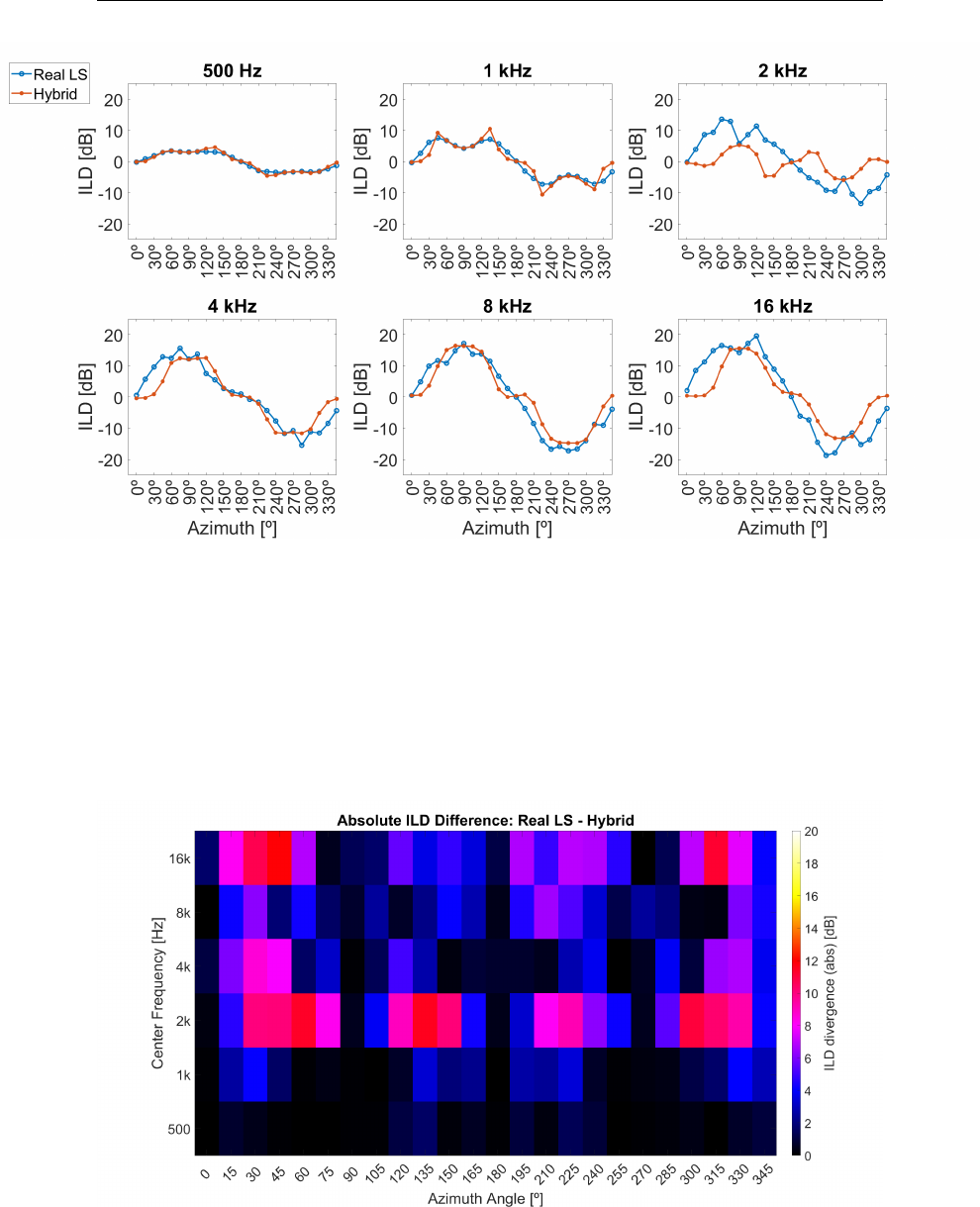

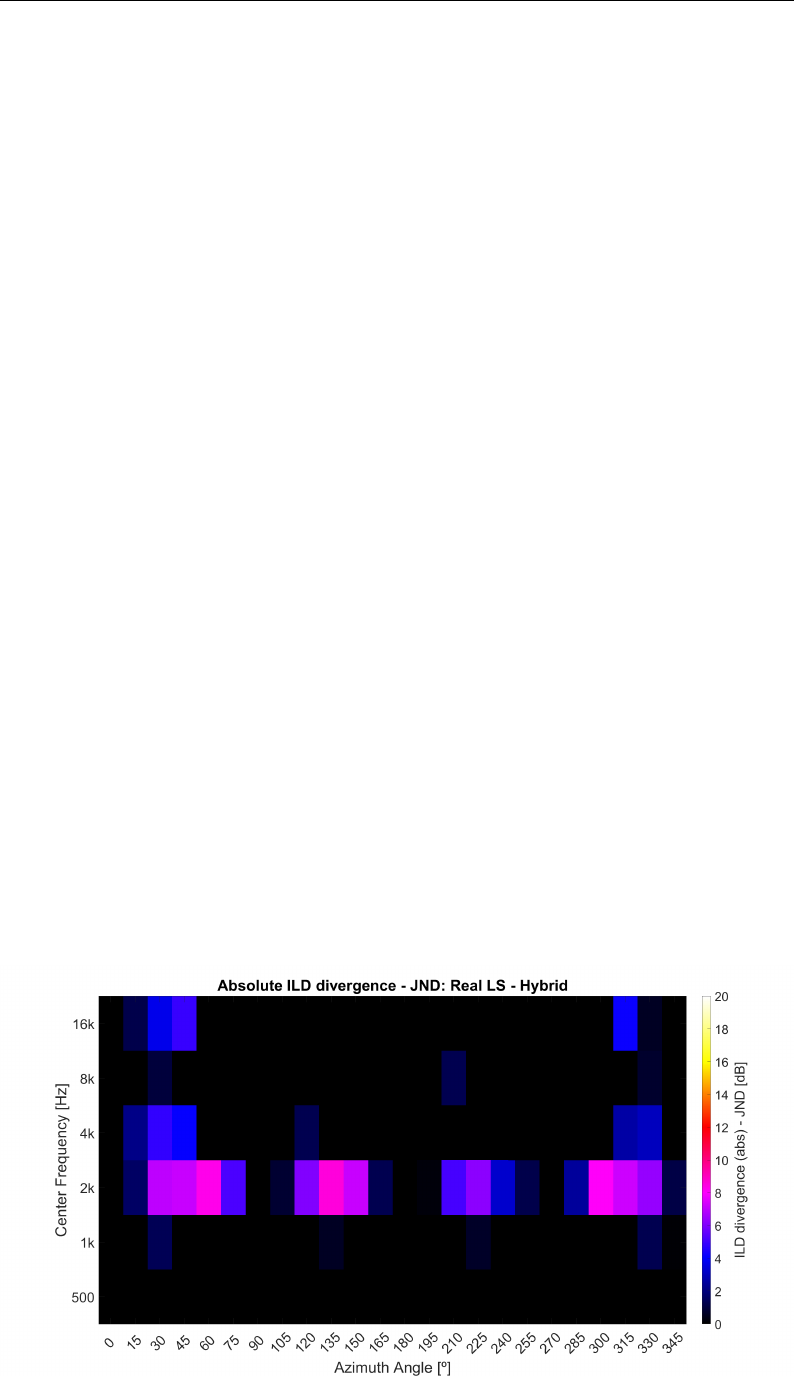

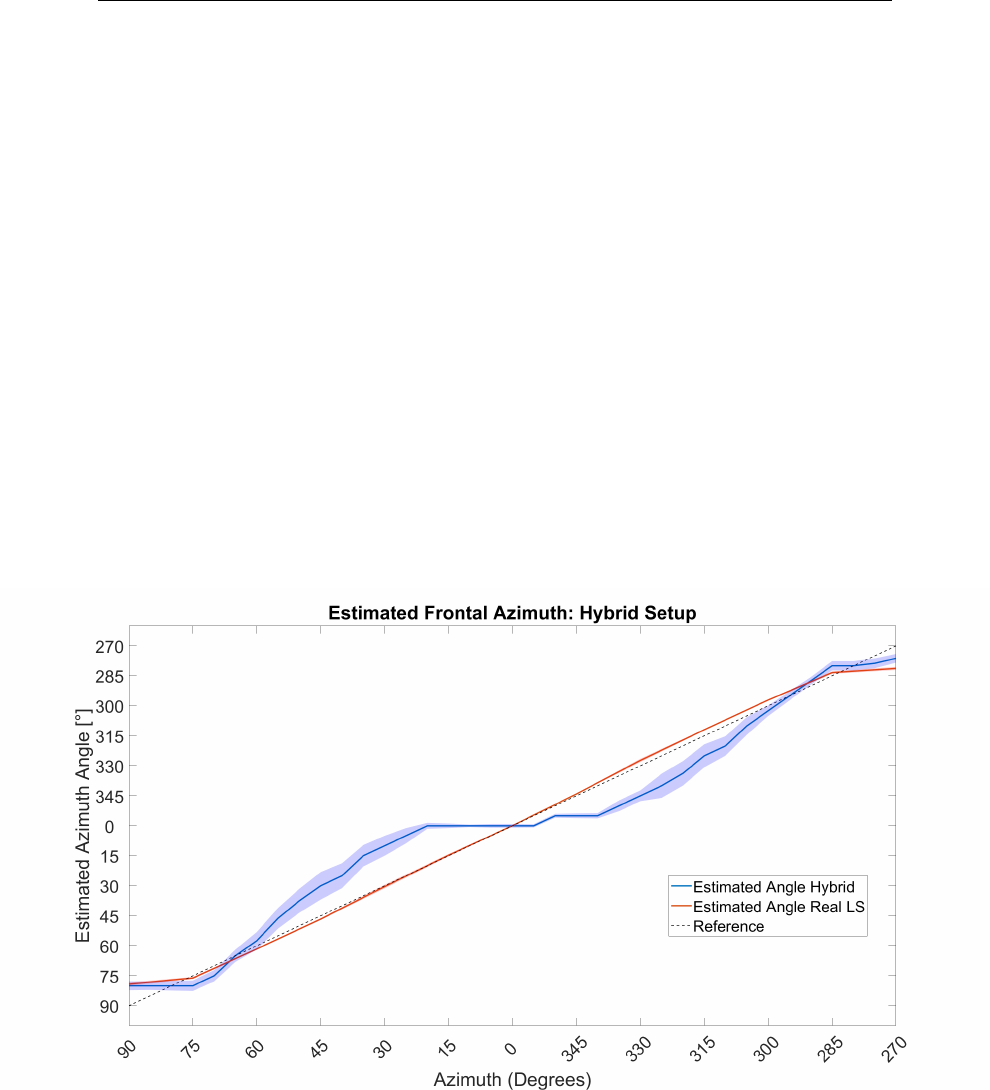

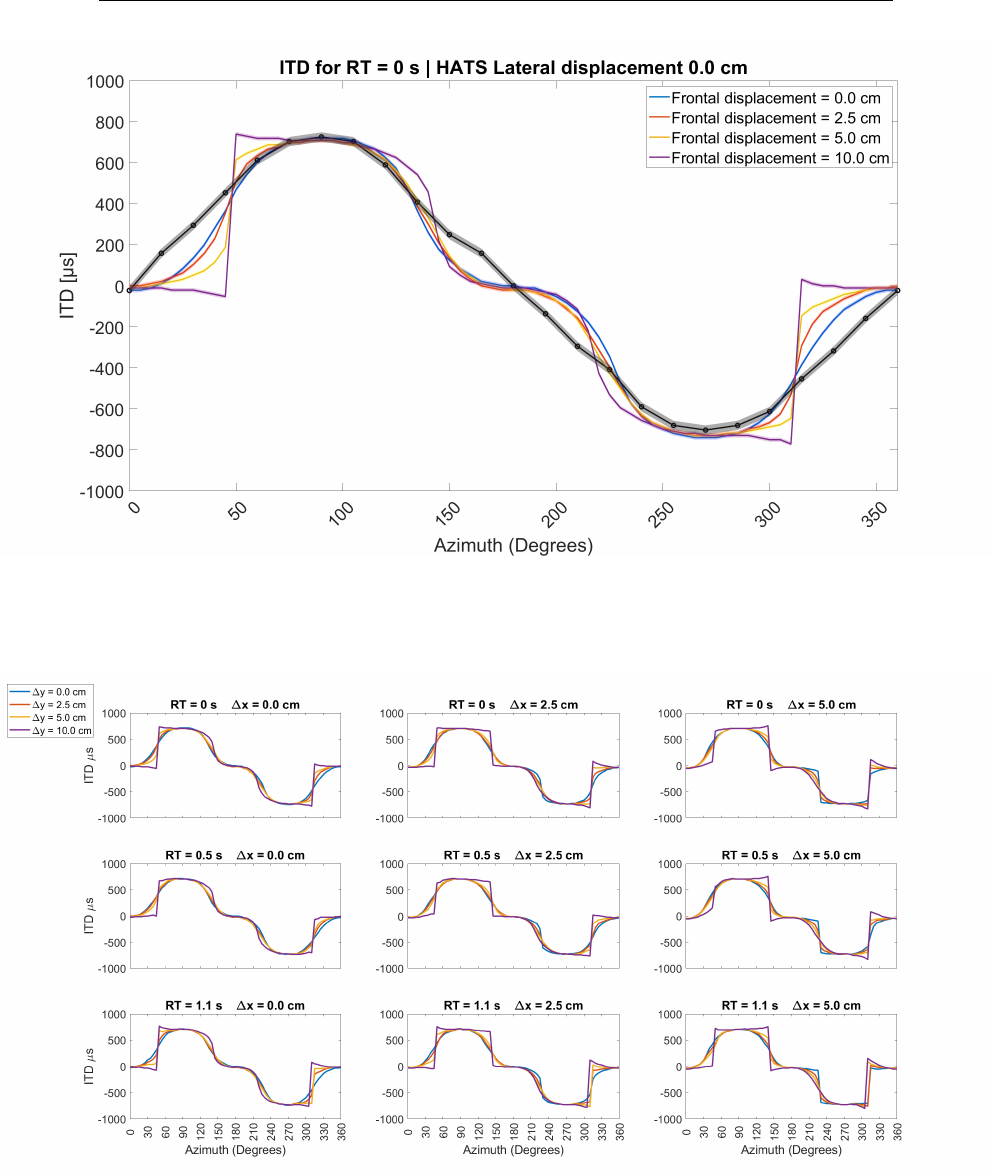

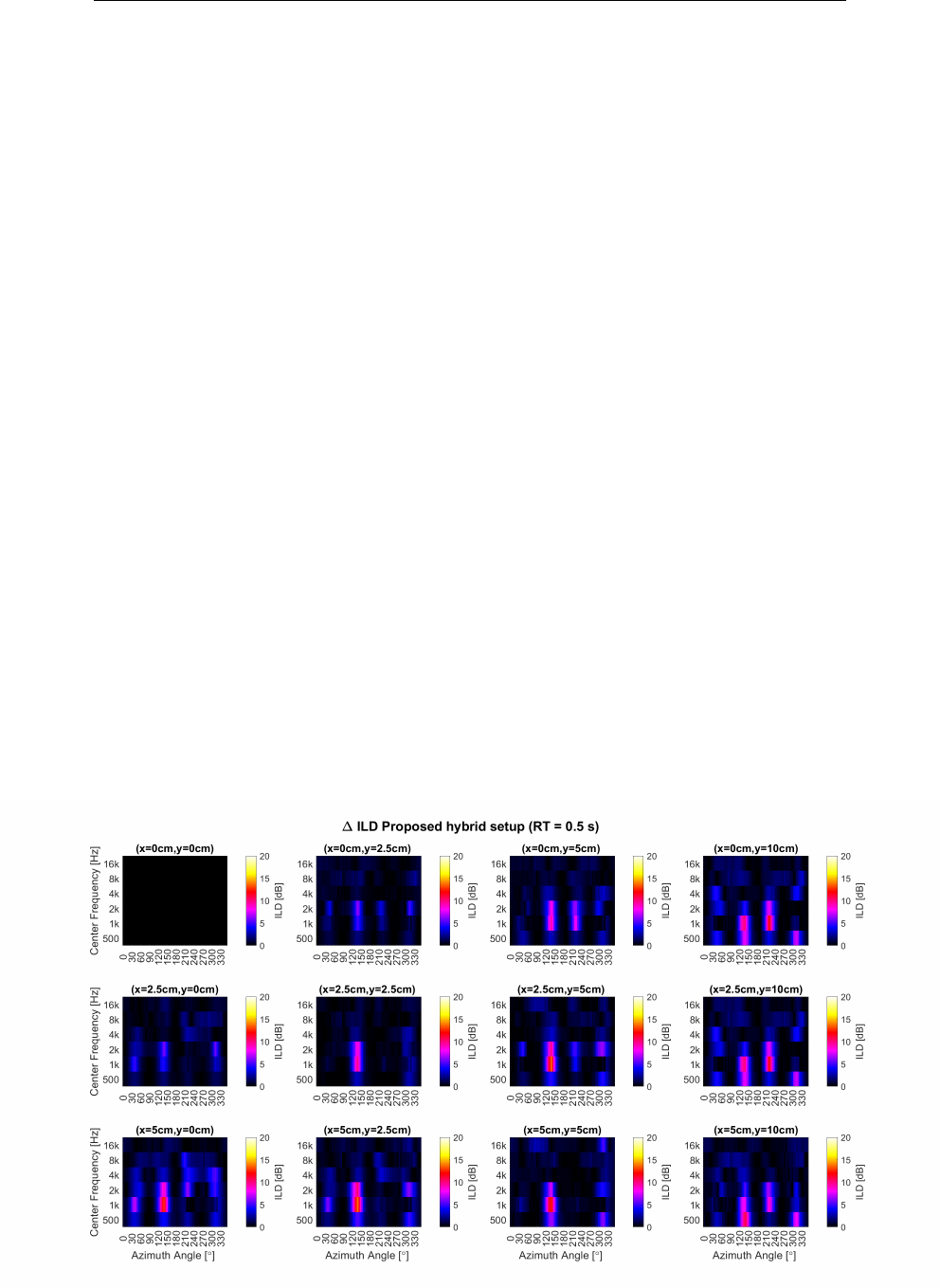

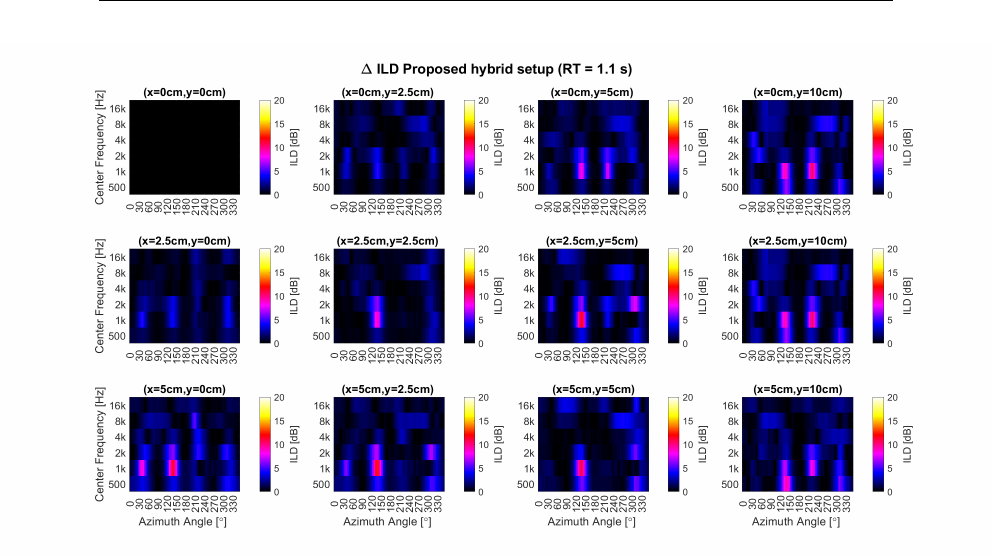

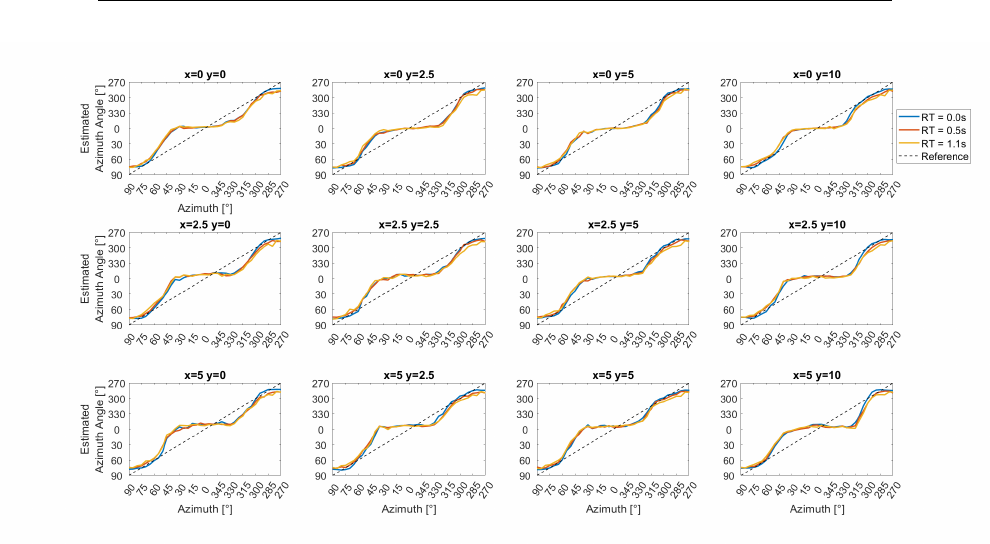

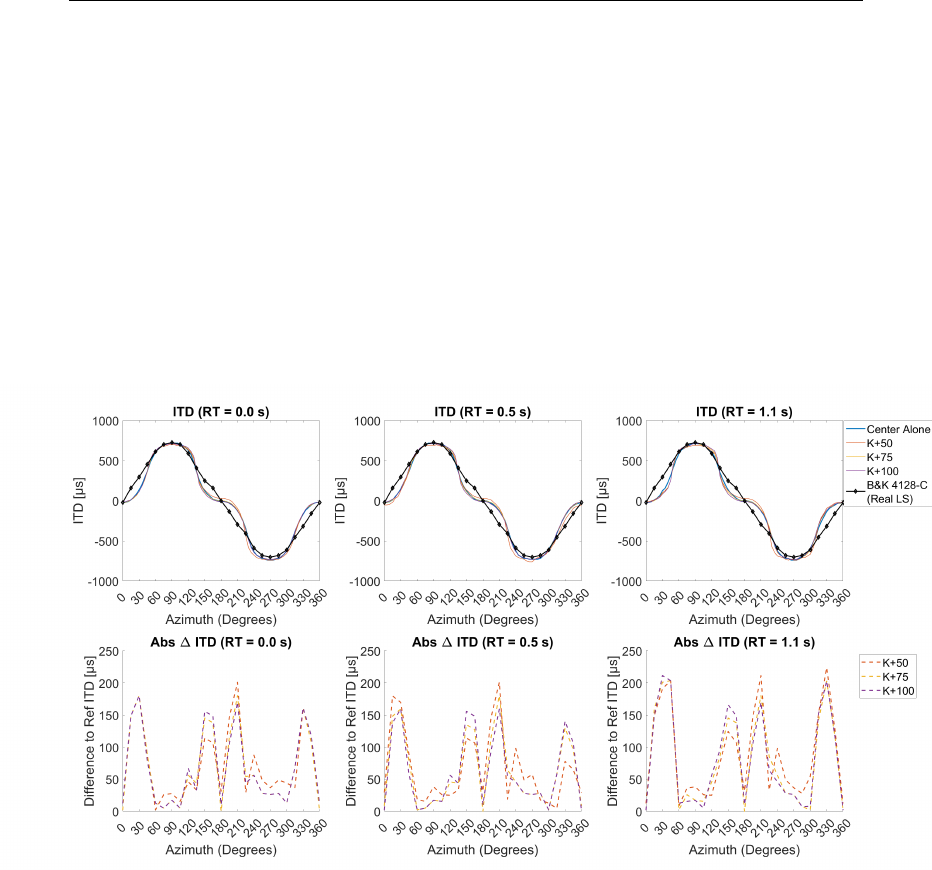

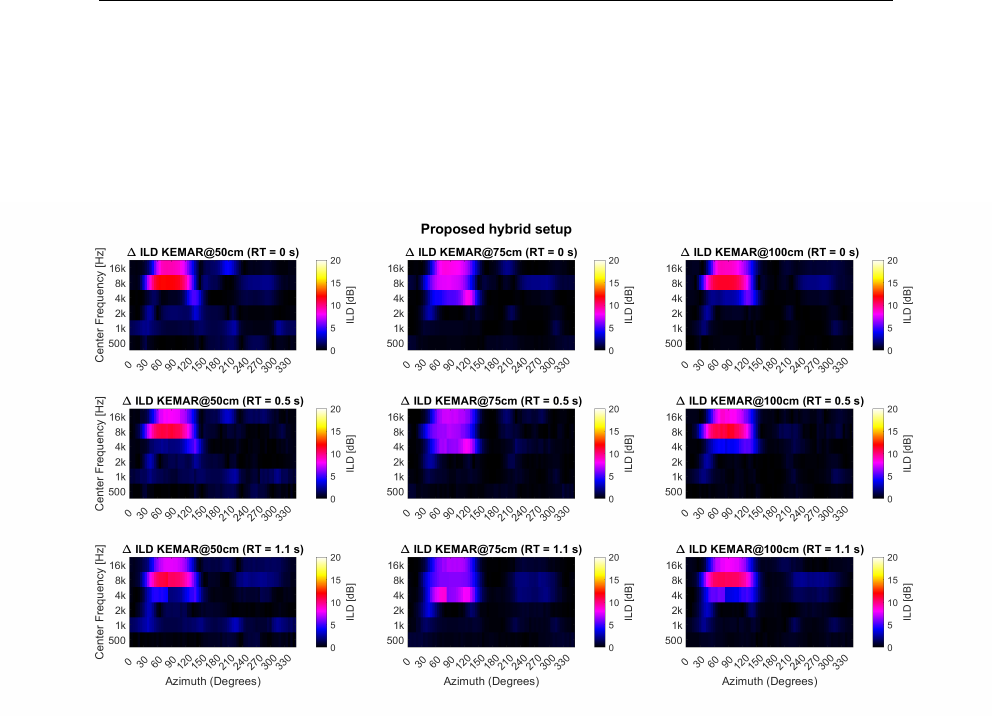

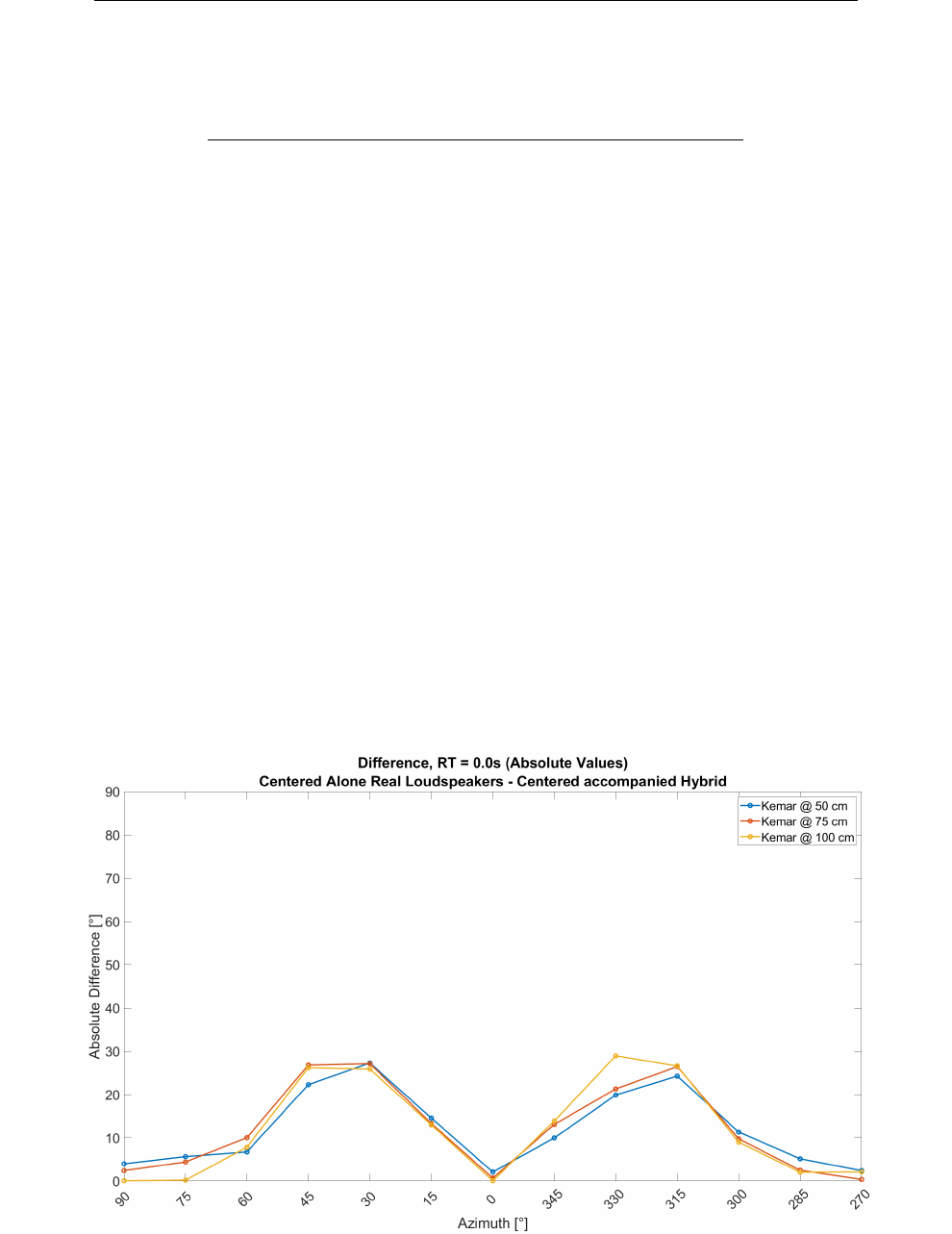

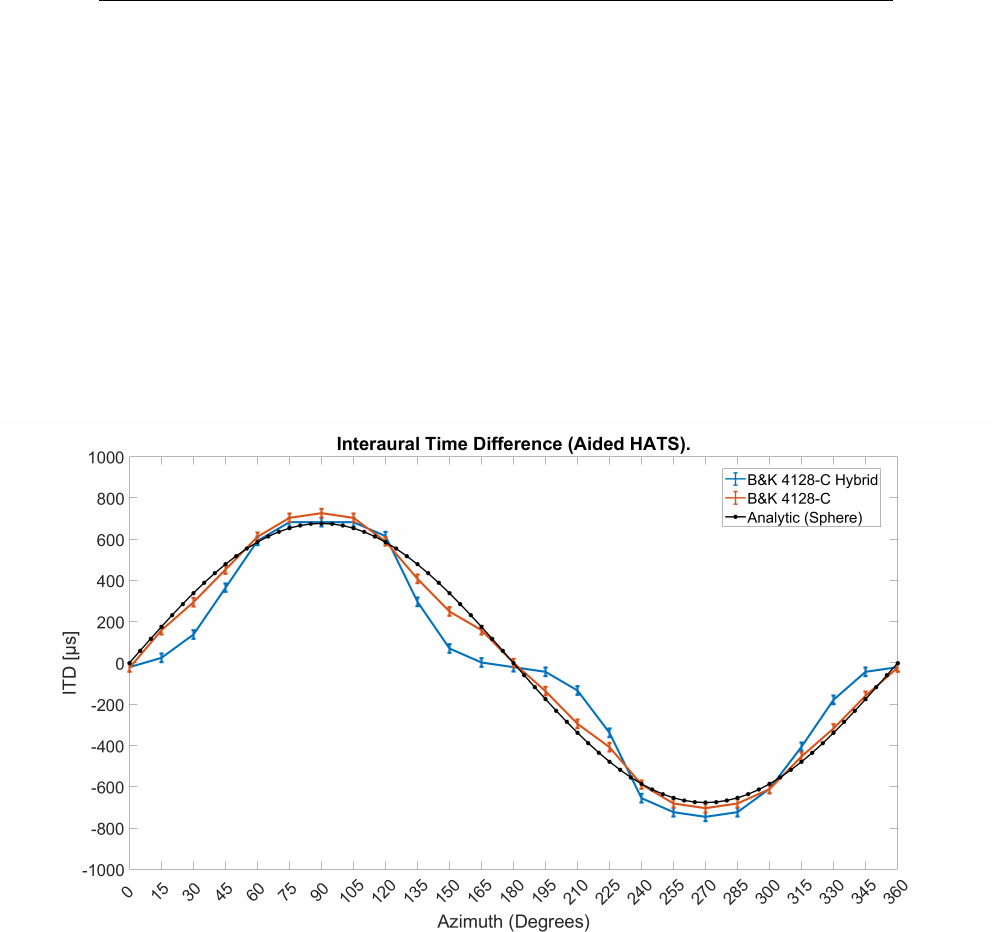

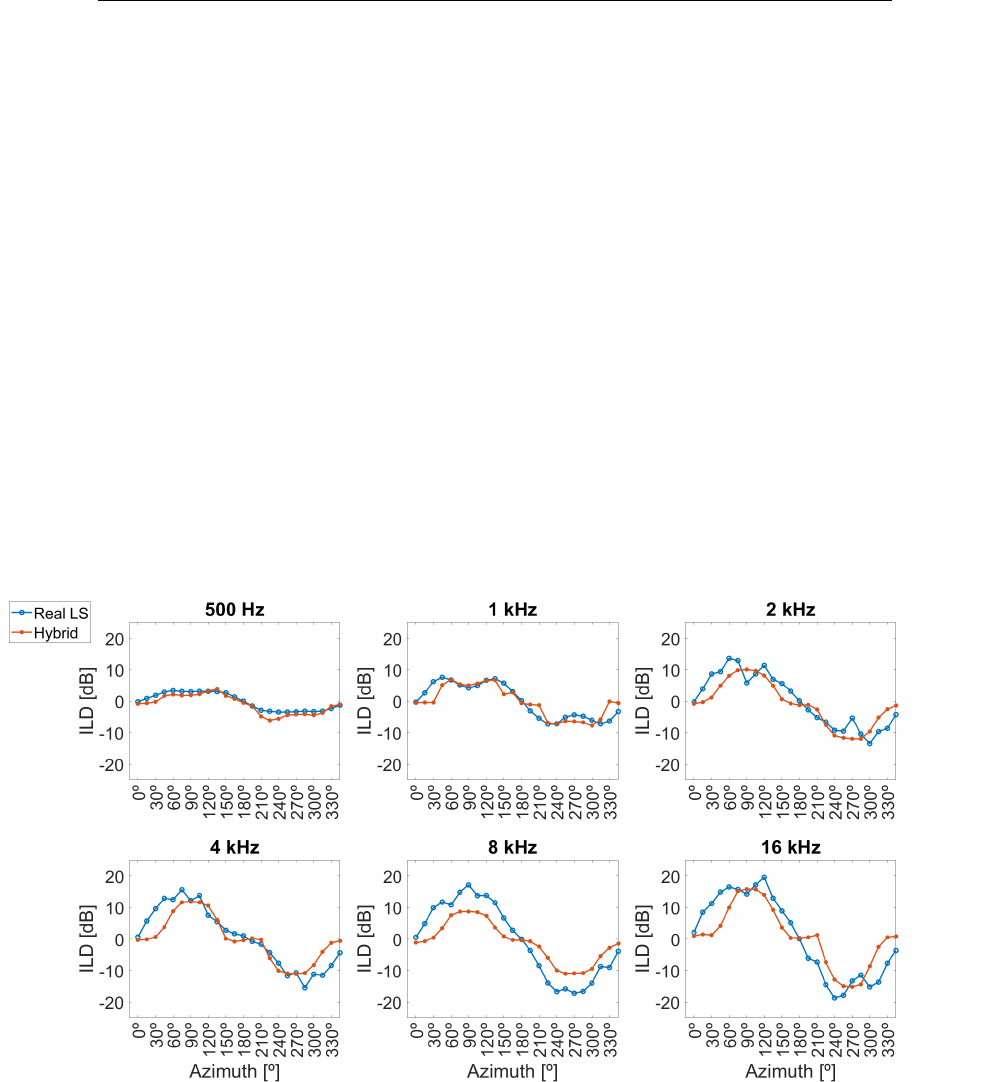

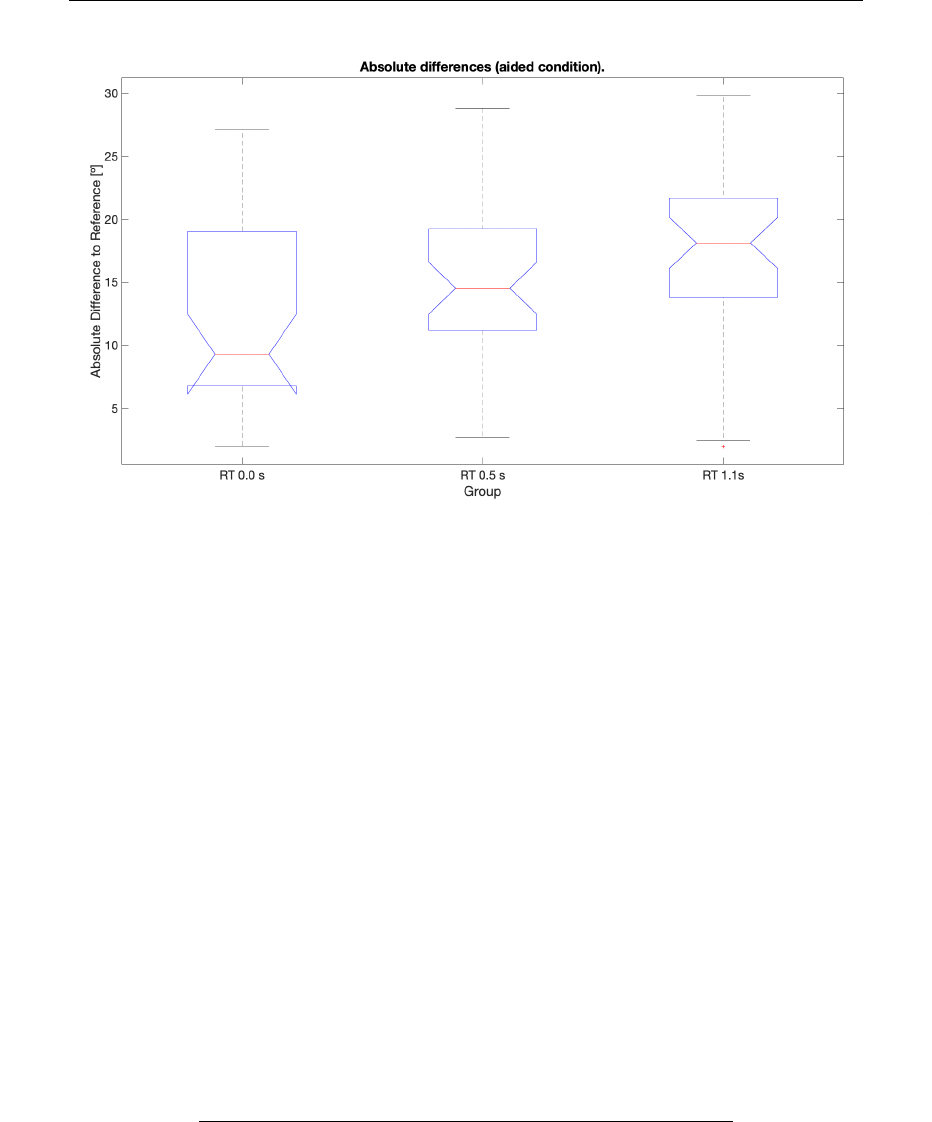

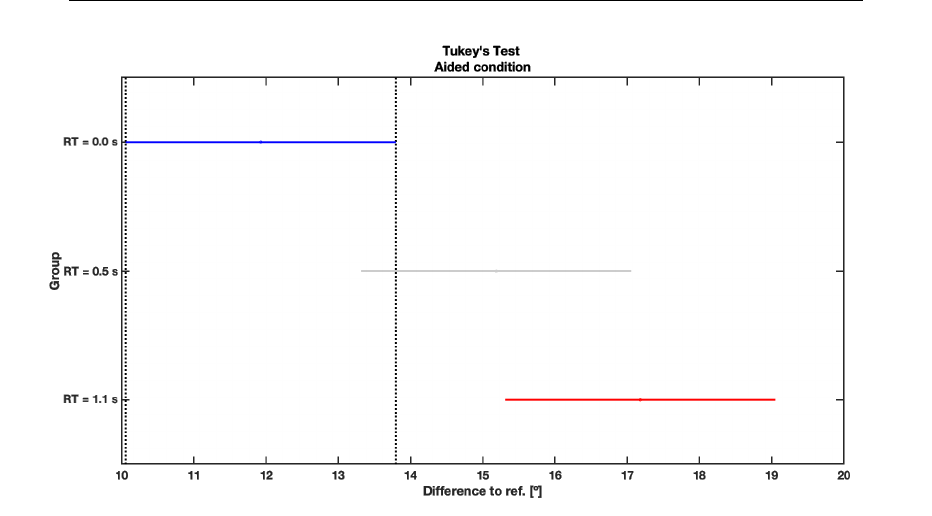

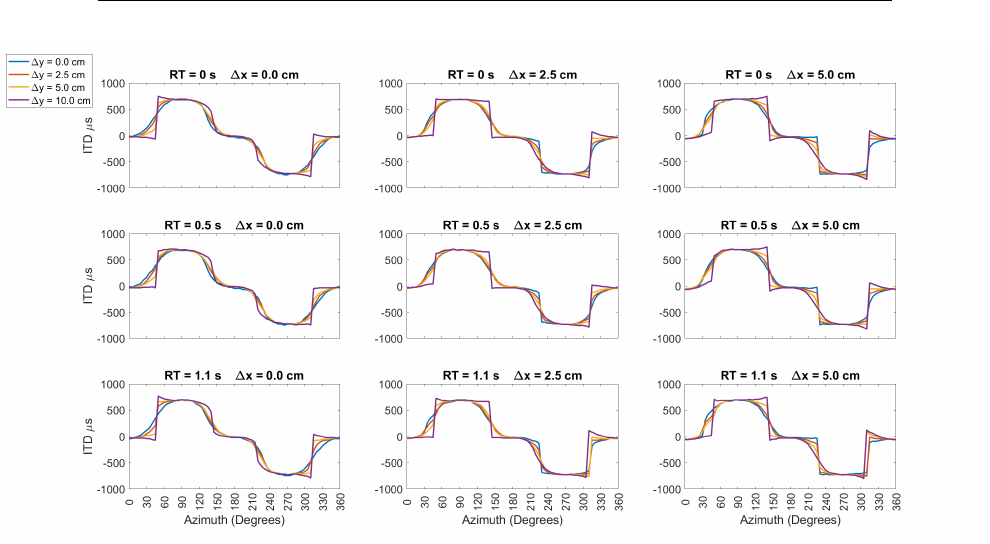

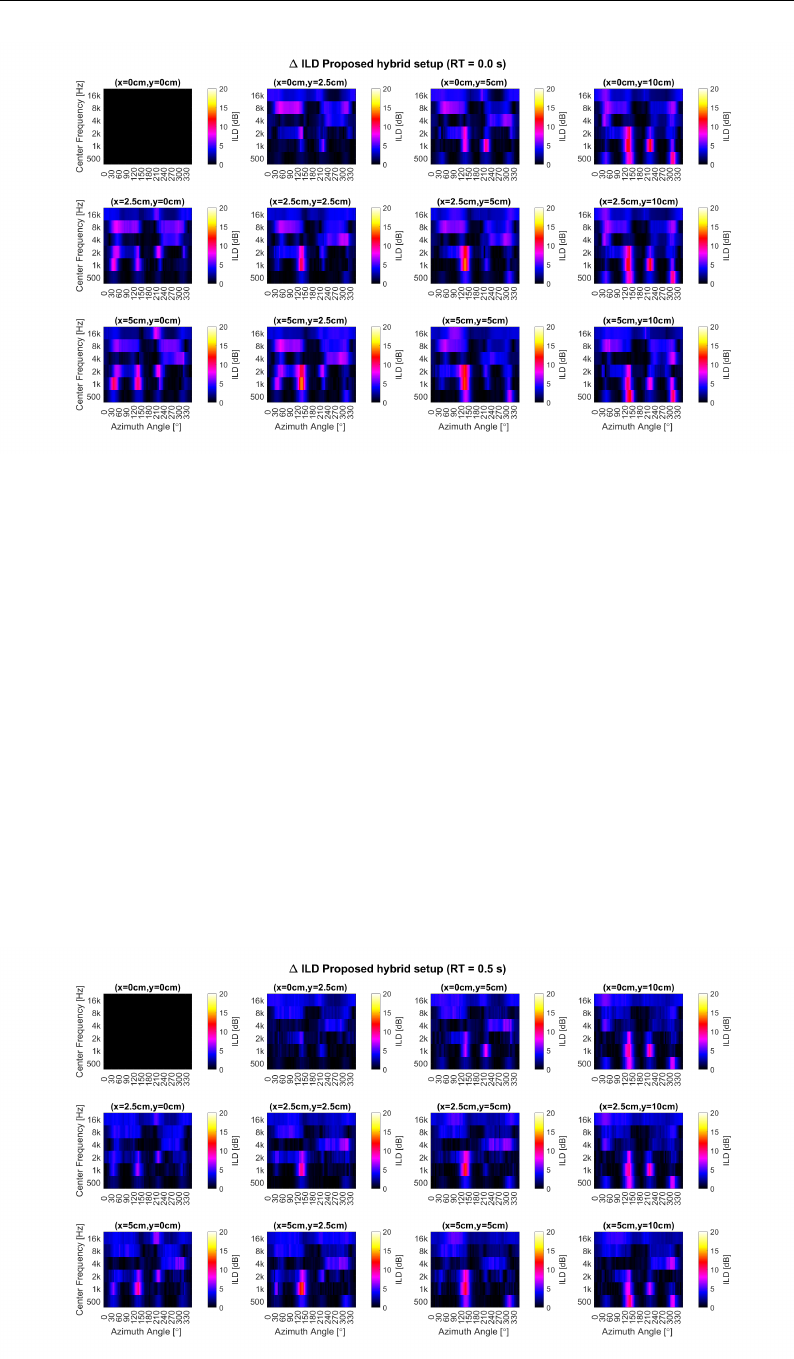

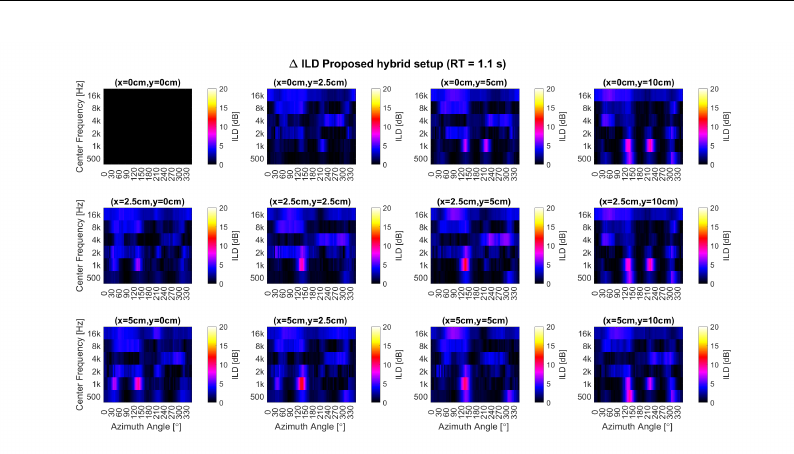

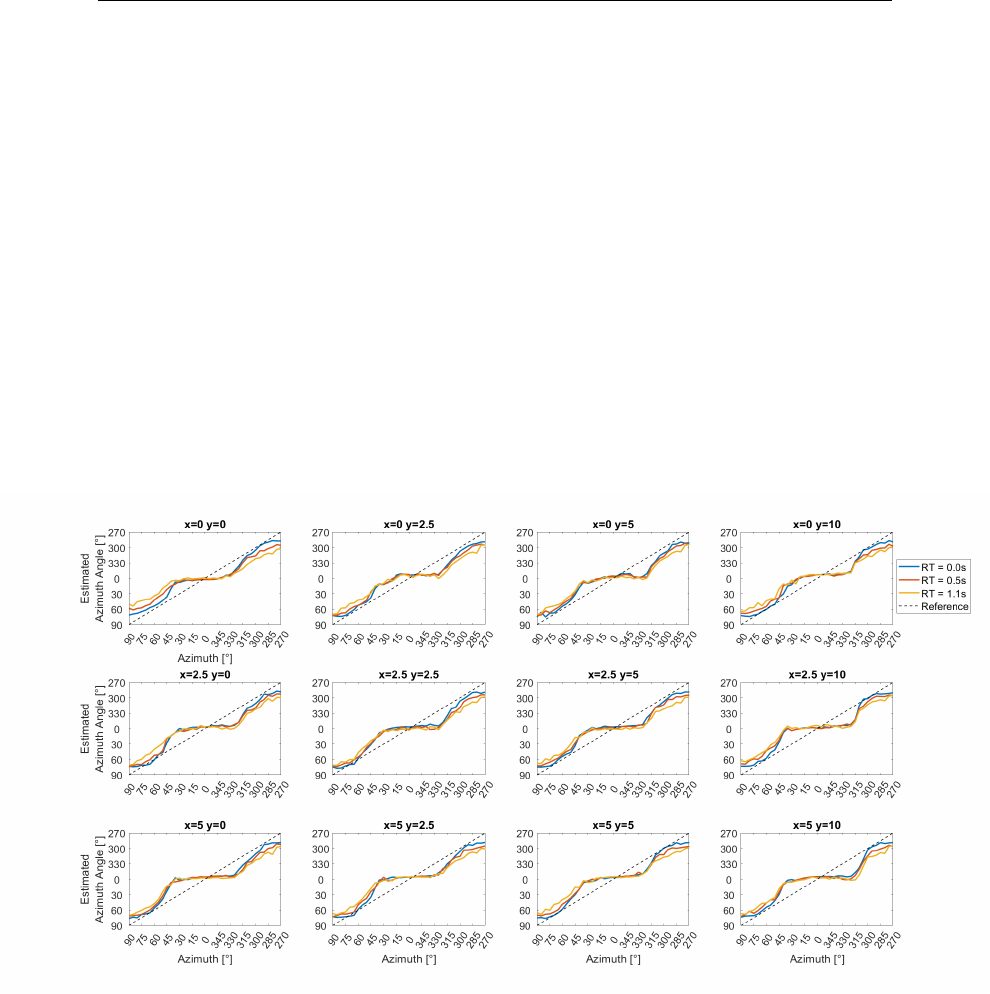

transaural (Adapted from Kang and Kim [131]).